DeepSeek for RAG: How DeepSeek Will Affect RAG Pipelines

Learn how DeepSeek is revolutionizing Retrieval-Augmented Generation (RAG) by focusing on efficiency over scale. Its adaptive reasoning, real-time data retrieval, and cost-effective AI infrastructure make advanced AI more accessible while reducing costs and environmental impact.

The AI industry is obsessed with advancement, and for years, the default path to improving RAG systems has been scaling up their underlying LLMs. While major players focused on enhancing RAG through increasingly sophisticated language models, a Chinese AI startup took a different approach—disrupting the game by optimizing the efficiency and adaptability of the data pipelines that power them.

Let’s take a deep dive into DeepSeek’s industry-wide influence on RAG-based systems, its impact on AI infrastructure markets, and how it compares to other highly rated LLMs.

Why DeepSeek Is a Game-Changer

What sets DeepSeek apart is its focus on reasoning-driven AI, using efficient algorithms and techniques like multi-stage training, data adaptability, and distillation to deliver high performance with significantly fewer resources.

Multi-disciplinary Applications

DeepSeek bridges retrieval precision with adaptive reasoning, enabling applications across disciplines. For instance, it can combine climate science data with economic models to forecast disaster impacts. By moving beyond static datasets, DeepSeek offers a real-time, context-aware framework for decision-making [7], [8].

Levels the Playing Field

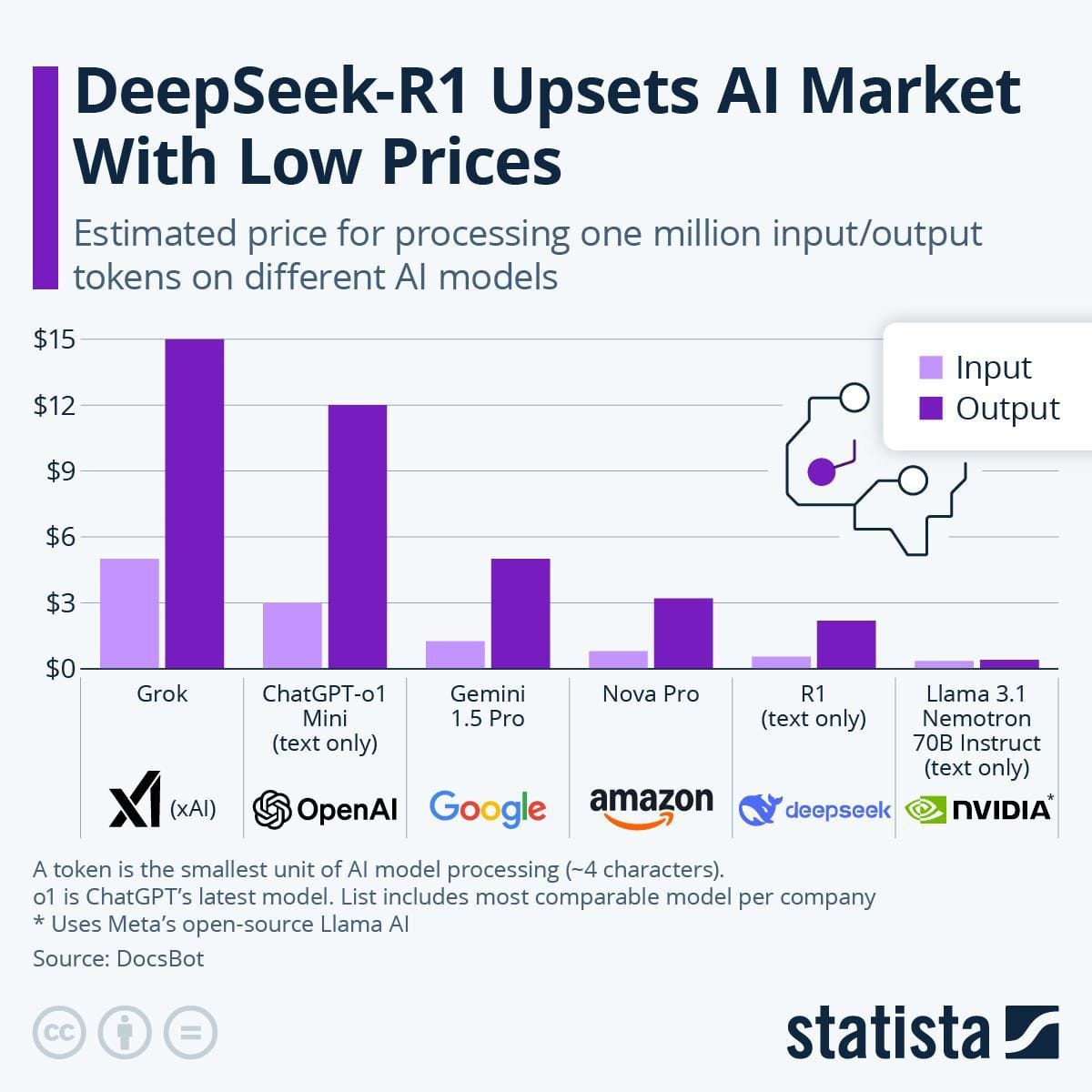

DeepSeek-R1 models rival the reasoning ability of OpenAI’s o1 models but at a fraction of the computational cost [3], [4]. The open-source LLM’s significantly cheaper pricing plans and lower hardware requirements make it accessible to developers working on projects with limited resources.

Improved Performance

The impact goes beyond lowering barriers for smaller players in the AI world. DeepSeek’s improved reliability through live data processing can transform operations, especially in high-stakes fields like finance and healthcare, where real-time insights are critical. Its versatility across areas like climate modeling and personalized education further shows its potential to tackle complex challenges.

Reducing the Greenhouse Effect

One of the major challenges in AI is its carbon footprint. AI systems rely heavily on GPUs, which consume large amounts of electricity, and generating that electricity often produces greenhouse gas emissions. Before DeepSeek, advancements in RAG applications were directly tied to the increasing size of LLMs. DeepSeek significantly cuts resource use, reportedly running a 671B-parameter LLM on affordable consumer hardware, which helps mitigate the environmental impact of large-scale AI systems.

How Does DeepSeek Enhance the Efficiency of RAG Systems?

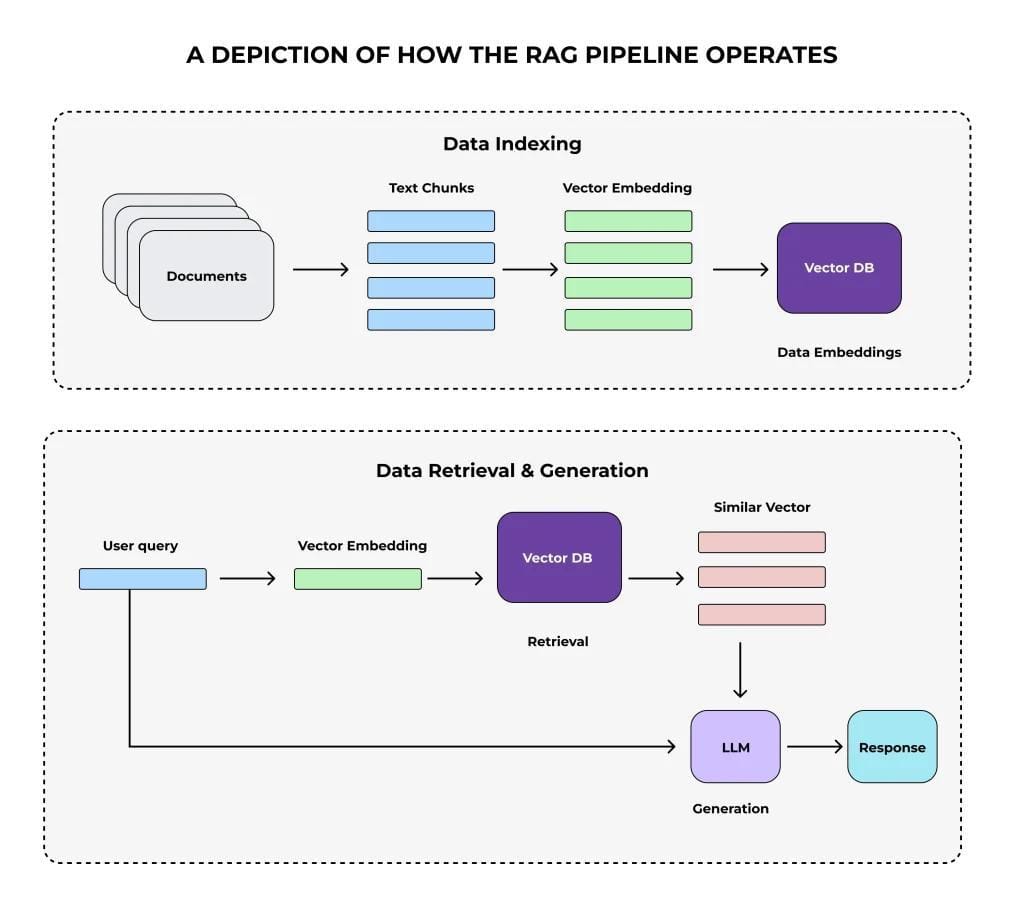

Research on RAG systems has focused narrowly on optimizing the retrieval and generation steps. DeepSeek changed the game by focusing on real-time data adaptability: adaptive vector embeddings that evolve dynamically based on a user’s input, data chunking strategies, and multi-stage training, which together improve the relevance of retrieved data and reduce computational costs.

Real-time Data Retrieval

Unlike traditional RAG pipelines, which rely on static, pre-processed datasets, DeepSeek embeds real-time data retrieval directly into the pipeline, allowing models to adapt to changing contexts quickly and accurately.

General RAG pipelines focus on broad semantic search, so they fall short in specialized fields like law or medicine, which require precision and compliance with regulations. DeepSeek improves search with adaptive vector embeddings, which evolve based on real-time user interactions and feedback.
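One way to picture embeddings that evolve with feedback is a Rocchio-style relevance update, a classic information-retrieval technique. The sketch below is an illustration under stated assumptions, not DeepSeek’s actual mechanism: the function names, the weights, and the 3-dimensional vectors are all hypothetical.

```python
import math


def normalize(vec):
    """Scale a vector to unit length (returned unchanged if it is all zeros)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else list(vec)


def cosine(a, b):
    """Cosine similarity between two vectors of equal length."""
    a, b = normalize(a), normalize(b)
    return sum(x * y for x, y in zip(a, b))


def adapt_query(query, clicked, skipped, alpha=1.0, beta=0.5, gamma=0.25):
    """Rocchio-style update: pull the query toward clicked documents and
    push it away from skipped ones. The weights are illustrative defaults."""
    def mean(vectors):
        if not vectors:
            return [0.0] * len(query)
        return [sum(col) / len(vectors) for col in zip(*vectors)]

    pos, neg = mean(clicked), mean(skipped)
    updated = [alpha * q + beta * p - gamma * n for q, p, n in zip(query, pos, neg)]
    return normalize(updated)


# Hypothetical 3-dimensional embeddings, for illustration only.
query = [1.0, 0.0, 0.0]
legal_doc = [0.6, 0.8, 0.0]    # the user clicked this result
generic_doc = [0.6, 0.0, 0.8]  # the user skipped this result

adapted = adapt_query(query, clicked=[legal_doc], skipped=[generic_doc])
```

After the update, the adapted query sits closer to the clicked document and further from the skipped one, so the next search leans toward what the user found useful.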

Unlike static embeddings, these dynamically adjust to maintain alignment with user intent, even for complex queries. This makes DeepSeek a handy tool for market analysis. It can prioritize recent market trends over historical data in financial modeling, improving decision-making accuracy.
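Prioritizing recent data over historical data can be approximated by decaying each retrieval score with the age of the document. This is a minimal sketch of that idea, assuming an exponential half-life; the half-life, document names, and similarity scores are hypothetical and not taken from DeepSeek.

```python
def recency_weighted_score(similarity, age_days, half_life_days=30.0):
    """Multiply a similarity score by an exponential decay on document age.
    A document that is `half_life_days` old keeps half of its score."""
    decay = 0.5 ** (age_days / half_life_days)
    return similarity * decay


def rank(documents, half_life_days=30.0):
    """Sort (name, similarity, age_days) tuples by recency-weighted score."""
    return sorted(
        documents,
        key=lambda d: recency_weighted_score(d[1], d[2], half_life_days),
        reverse=True,
    )


# Hypothetical retrieval results: (name, similarity, age in days).
results = [
    ("2019 annual report", 0.90, 365 * 5),
    ("last week's earnings call", 0.80, 7),
    ("last quarter's filing", 0.85, 90),
]

ranked = [name for name, _, _ in rank(results)]
```

With these inputs, the week-old document ranks first even though the five-year-old report has the highest raw similarity.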

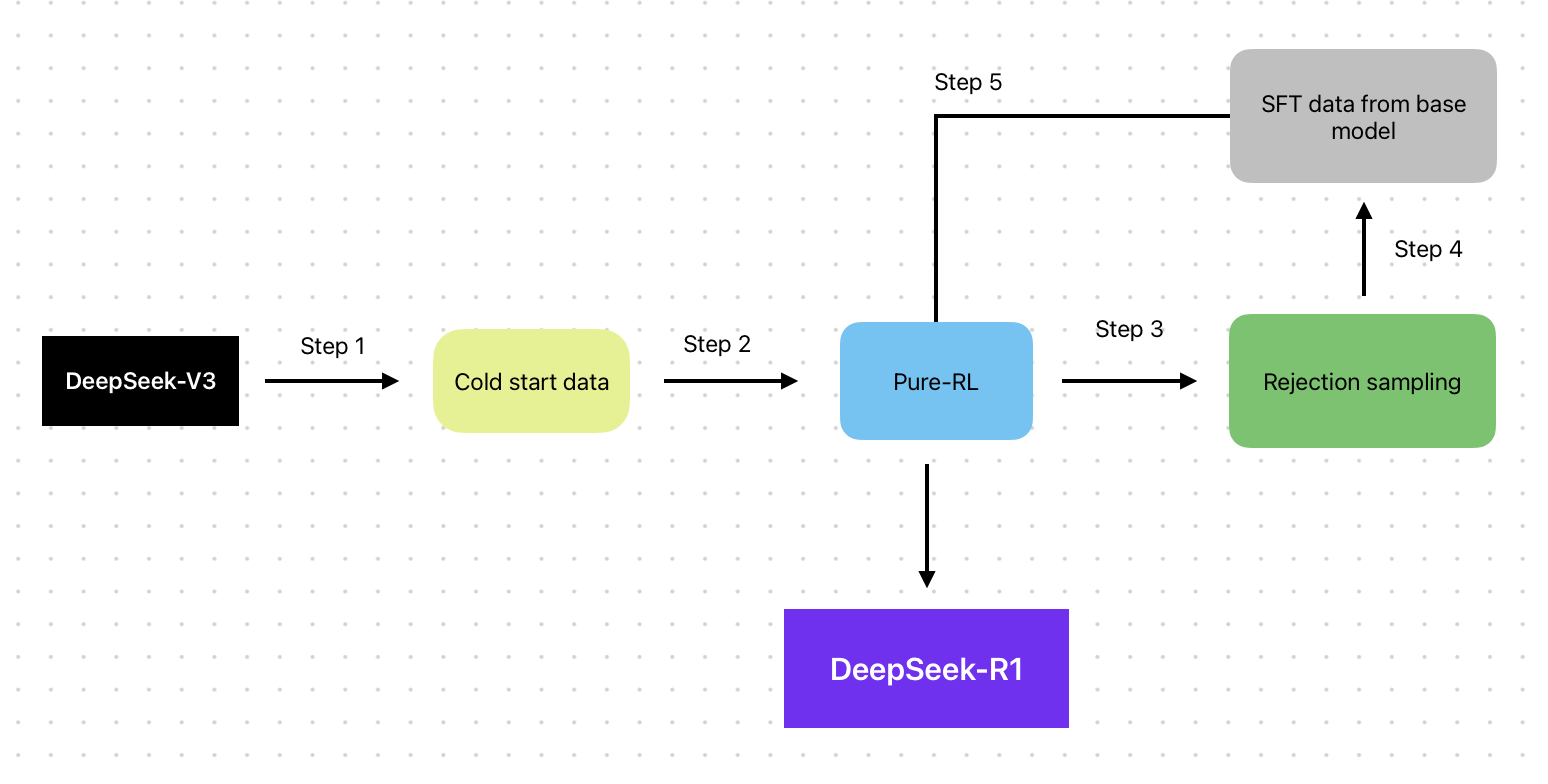

Usually, achieving this level of adaptability means incurring substantial computational costs. However, DeepSeek-R1 overcomes this challenge by employing rejection sampling, multi-stage training, and reinforcement learning (RL)-based distillation to optimize performance without inflating hardware demands [12], [13]. This efficiency is particularly evident in resource-constrained environments, such as mobile applications, where smaller distilled models maintain high reasoning accuracy.
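Rejection sampling, in the sense used for building training data, means drawing several candidate answers and keeping only those that pass a check. The sketch below illustrates the pattern with a toy arithmetic task; the generator, the checker, and all names are hypothetical stand-ins rather than DeepSeek’s training code.

```python
import random


def rejection_sample(prompt, generate, is_acceptable, num_candidates=8, seed=0):
    """Draw several candidate answers and keep only those that pass the check."""
    rng = random.Random(seed)
    candidates = [generate(prompt, rng) for _ in range(num_candidates)]
    return [c for c in candidates if is_acceptable(prompt, c)]


# Hypothetical stand-ins: a noisy "model" that answers an addition prompt,
# and a rule-based checker that verifies the arithmetic.
def noisy_adder(prompt, rng):
    a, b = prompt
    return a + b + rng.choice([-1, 0, 0, 1])  # correct roughly half the time


def is_correct(prompt, answer):
    a, b = prompt
    return answer == a + b


kept = rejection_sample((2, 3), noisy_adder, is_correct, num_candidates=20)
```

Only verified answers survive, so whatever is trained on `kept` sees clean examples without anyone labelling them by hand.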

Expert perspectives highlight that DeepSeek’s multi-stage training pipeline mirrors neuroplasticity, allowing the system to refine its reasoning capabilities over time. This adaptive learning framework not only reduces computational costs but also mitigates the risk of reinforcing errors—a critical advantage over static models.

In e-commerce applications, DeepSeek-R1 has been deployed to refine product recommendations [14], [10]. The model adjusts its embeddings to prioritize products that align with emerging consumer preferences by analyzing click-through rates and purchase patterns. A case study from a leading online retailer revealed a 12% increase in conversion rates after implementing this adaptive mechanism, underscoring its commercial viability.

Data Chunking

Until recently, the common assumption was that larger datasets improve RAG performance. DeepSeek challenged this assumption with hierarchical chunking algorithms, which yield better results by structuring data into context-preserving segments. In the health sector, applying this kind of chunking has reduced irrelevant retrievals by 18% [6], [14].
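To make the idea of context-preserving segments concrete, here is a minimal sketch of two-level chunking: split on sections first, then group sentences within each section. It assumes blank lines separate sections and ". " separates sentences, which is a simplification; the function name and the sample record are hypothetical.

```python
def hierarchical_chunks(document, max_words=40):
    """Split text into sections (blank-line separated), then into sentence
    groups that aim for at most `max_words` words (a single longer sentence
    becomes its own chunk). Each chunk keeps its section index so the
    surrounding context can be restored at retrieval time."""
    chunks = []
    sections = [s.strip() for s in document.split("\n\n") if s.strip()]
    for section_id, section in enumerate(sections):
        sentences = [s.strip() for s in section.replace("\n", " ").split(". ") if s.strip()]
        current, count = [], 0
        for sentence in sentences:
            words = len(sentence.split())
            if current and count + words > max_words:
                chunks.append({"section": section_id, "text": ". ".join(current)})
                current, count = [], 0
            current.append(sentence)
            count += words
        if current:
            chunks.append({"section": section_id, "text": ". ".join(current)})
    return chunks


# Hypothetical two-section record, for illustration only.
record = (
    "Patient history. The patient reports mild asthma. No known allergies.\n\n"
    "Current medication. Salbutamol inhaler as needed. No other prescriptions."
)
chunks = hierarchical_chunks(record, max_words=6)
```

Because chunks never cross a section boundary, a retrieved sentence about medication cannot be glued to a sentence about patient history.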

In-Memory Caching

A lesser-known factor influencing outcomes is the latency introduced by data transformation processes [12]. DeepSeek mitigates this by employing in-memory processing tools like Redis [9], [10], which reduce response times and enhance the user experience. This approach challenges the conventional wisdom that larger models inherently deliver better results, demonstrating that efficiency and scalability can outperform sheer computational power.
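The caching pattern described here can be sketched with a small in-process cache that expires entries after a time-to-live. In production an in-memory store such as Redis would typically play this role; the class and function names below are hypothetical, and the retriever is a stand-in for a real vector-store lookup.

```python
import time


class TTLCache:
    """A tiny in-process cache with per-entry expiry, standing in for an
    in-memory store such as Redis."""

    def __init__(self, ttl_seconds=60.0, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._store = {}

    def get(self, key):
        entry = self._store.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self.clock() >= expires_at:
            del self._store[key]  # drop stale entries lazily
            return None
        return value

    def set(self, key, value):
        self._store[key] = (value, self.clock() + self.ttl)


def cached_retrieve(query, cache, retrieve):
    """Return cached results for `query`, calling `retrieve` only on a miss."""
    hit = cache.get(query)
    if hit is not None:
        return hit
    result = retrieve(query)
    cache.set(query, result)
    return result


calls = []


def slow_retrieve(query):  # hypothetical stand-in for a vector-store lookup
    calls.append(query)
    return [f"doc about {query}"]


cache = TTLCache(ttl_seconds=30.0)
first = cached_retrieve("interest rates", cache, slow_retrieve)
second = cached_retrieve("interest rates", cache, slow_retrieve)
```

The second call is served from memory, so the slow retriever runs only once; the short time-to-live keeps cached answers from going stale when the underlying data changes.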

In-memory processing is especially valuable in financial risk analysis. By integrating live market data with historical trends, DeepSeek-R1 can generate actionable insights in milliseconds, outperforming legacy systems that struggle with latency [2]. A case study from a leading investment firm demonstrated a 23% reduction in decision-making time when transitioning to DeepSeek-R1-powered pipelines, directly impacting portfolio performance.

Impact of DeepSeek on AI Infrastructure Investments

Due to DeepSeek’s impact on the computation requirements for running RAG systems, we can expect consumer behavior to pivot from owning costly infrastructure to using flexible, usage-based models. Traditional AI investments often focus on large-scale GPU clusters, which require high upfront costs and continuous maintenance. However, Infrastructure-as-a-Service (IaaS) and decentralized GPU networks, like those from Aethir, are changing this model.

IaaS is effective because it allows businesses to scale resources based on real-time needs. For example, e-commerce platforms using DeepSeek’s adaptive RAG pipelines can adjust GPU usage during busy shopping seasons and reduce it during slower periods, lowering operational costs while meeting the demands of latency-sensitive tasks, like fraud detection in finance.

Another benefit of these models is cost transparency. Businesses pay only for the resources they use, making budgeting easier and reducing financial risk. This is especially useful for startups and smaller companies that may not have the capital to invest in their own infrastructure. IaaS helps democratize access to advanced AI, encouraging innovation.
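The trade-off between paying per use and owning hardware comes down to simple arithmetic. The sketch below compares the two for a workload with a seasonal peak. Every number in it (GPU hours, hourly price, hardware price, upkeep) is a made-up placeholder, not a real quote from any provider.

```python
def usage_based_cost(gpu_hours_per_month, price_per_gpu_hour):
    """Yearly cost when paying only for the GPU hours actually used."""
    return sum(gpu_hours_per_month) * price_per_gpu_hour


def owned_cost(peak_gpu_hours, hours_in_month, hardware_price_per_gpu, yearly_upkeep_per_gpu):
    """First-year cost of owning enough GPUs to cover the busiest month."""
    gpus_needed = -(-peak_gpu_hours // hours_in_month)  # ceiling division
    return gpus_needed * (hardware_price_per_gpu + yearly_upkeep_per_gpu)


# Hypothetical workload: ten quiet months and two busy shopping-season months.
monthly_gpu_hours = [1000] * 10 + [6000] * 2

usage = usage_based_cost(monthly_gpu_hours, price_per_gpu_hour=2.0)
owned = owned_cost(
    peak_gpu_hours=max(monthly_gpu_hours),
    hours_in_month=720,
    hardware_price_per_gpu=25000,
    yearly_upkeep_per_gpu=3000,
)
```

Under these placeholder figures, the owned cluster must be sized for the two peak months and sits mostly idle the rest of the year, which is why the usage-based total comes out far lower. A steadier workload would narrow or reverse the gap.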

Contrary to traditional thinking, decentralized infrastructure models can offer better resilience and accessibility. For example, decentralized GPU clouds reduce the risks of single-point failures, ensuring service continuity during outages.

Businesses can thrive in the shifting AI landscape by combining the scalability of IaaS with strategic ownership of essential infrastructure, striking a balance between flexibility and control for long-term success.

Open-source Collaborations and Contributions

Open-source contributions have changed the course of AI development, and DeepSeek is a great example of this shift, fostering a collaborative ecosystem that speeds up innovation. Unlike proprietary models that work in isolation, DeepSeek’s open-source framework encourages global participation, allowing researchers and developers to create solutions for different industries.

Only a few months after launching its first-generation model, DeepSeek has seen a 12% improvement in retrieval accuracy in fields like legal research and healthcare, thanks to community-driven enhancements to its adaptive vector embeddings.

One of the strongest aspects of DeepSeek’s open-source approach is its ability to crowdsource benchmarks. By using real-world datasets contributed by the community, DeepSeek ensures its models are tested in various contexts. A recent hackathon helped optimize DeepSeek’s hierarchical chunking algorithm, reducing retrieval noise by 18% in multilingual datasets. This ongoing feedback loop kills two birds with one stone: it refines the model and makes advanced AI more accessible.

Many people argue that open-source models lack the reliability of proprietary systems. However, DeepSeek addresses these concerns with modular APIs and community-led audits that ensure transparency, accountability, and high performance. As one expert put it, “Open-source AI isn’t just an alternative; it’s a driver for fair innovation.”

To picture this, think of DeepSeek as a network of human expertise, with each contributor acting like a node, enhancing the system’s overall intelligence—similar to how biological systems grow through collective input.

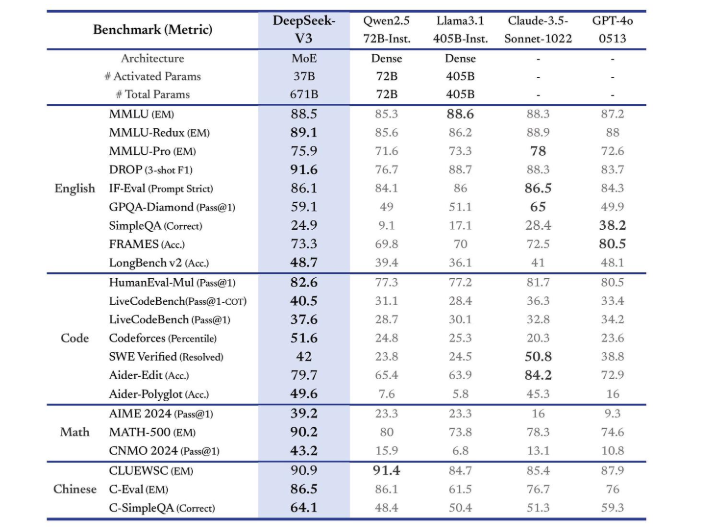

Benchmarks: DeepSeek vs Other Popular LLMs

DeepSeek-R1 leverages RL to continuously refine its retrieval and reasoning processes, allowing it to adapt to user queries. This has led to impressive benchmark results, such as AIME 2024 (Pass@1: 79.8%) and MATH-500 (Pass@1: 97.3%).
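For readers unfamiliar with the metric, Pass@1 is commonly estimated by sampling several responses per problem and averaging how often they are correct. The sketch below shows that calculation, assuming each response has already been graded as correct or incorrect; the function names and the sample grades are hypothetical.

```python
def pass_at_1(samples_correct):
    """Average correctness over k sampled responses to one problem."""
    return sum(samples_correct) / len(samples_correct)


def benchmark_pass_at_1(per_problem_samples):
    """Mean pass@1 across all problems in a benchmark."""
    scores = [pass_at_1(samples) for samples in per_problem_samples]
    return sum(scores) / len(scores)


# Hypothetical grades: three problems, four sampled responses each.
grades = [
    [True, True, False, True],     # solved in 3 of 4 samples
    [False, False, False, False],  # never solved
    [True, True, True, True],      # always solved
]

score = benchmark_pass_at_1(grades)
```

Averaging over several samples makes the score less sensitive to a single lucky or unlucky generation than grading one response per problem.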

DeepSeek-R1 combines supervised fine-tuning with RL to implement a multi-stage training pipeline that allows it to strike a balance between precision and generalization. This structured approach ensures strong performance in both narrow and broad contexts.

This advanced training technique allows DeepSeek-R1 to excel in domain-specific tasks. It edged out OpenAI-o1-1217 on software engineering tasks, scoring 49.2% on SWE-bench Verified.

Real-world applications further highlight DeepSeek-R1’s capabilities. In healthcare, it has reduced diagnostic error rates by 18% by analyzing patient records with its hierarchical chunking mechanism, ensuring the retrieval of only the most relevant data.

An important yet lesser-known factor in its performance is DeepSeek-R1’s Sparse Mixture-of-Experts architecture. This architecture activates only the necessary components during inference, reducing computational costs and improving scalability, making it ideal for resource-constrained environments.
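The idea of activating only the necessary components can be illustrated with generic top-k expert routing. This is a textbook-style sketch, not DeepSeek’s actual router: the experts are toy scalar functions, and the gate scores are hypothetical values that a learned gating network would normally produce.

```python
import math


def softmax(scores):
    """Convert raw gate scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(gate_scores, k=2):
    """Pick the k experts with the highest gate scores and renormalize
    their weights so they sum to 1. All other experts stay idle."""
    probs = softmax(gate_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    total = sum(probs[i] for i in top)
    return {i: probs[i] / total for i in top}


def moe_forward(x, experts, gate_scores, k=2):
    """Weighted sum of the selected experts' outputs for a scalar input.
    Returns the output and the sorted indices of the active experts."""
    weights = route_top_k(gate_scores, k)
    return sum(w * experts[i](x) for i, w in weights.items()), sorted(weights)


# Hypothetical experts: simple scalar functions standing in for feed-forward blocks.
experts = [lambda x: x + 1, lambda x: x * 2, lambda x: x - 3, lambda x: x ** 2]
output, active = moe_forward(3.0, experts, gate_scores=[0.1, 2.0, -1.0, 2.0], k=2)
```

Only two of the four experts run for this input, so the compute per token scales with `k` rather than with the total number of experts.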

As the benchmarks show, DeepSeek-R1 challenges the belief that larger models are always superior. Its success highlights the value of task-specific optimization, pointing toward a future where smaller, more efficient models lead in specialized AI applications.

Bottom Line

DeepSeek runs counter to the bigger-is-better culture in AI development. Instead, it emphasizes the value of optimizing data pipelines and reasoning systems, setting a new standard for sustainable and scalable AI innovation.

By prioritizing reasoning over raw scale, DeepSeek-R1 not only enhances RAG pipelines but also sets a new benchmark for cost-effective, domain-specific AI solutions. In a nutshell, DeepSeek redefines efficiency by prioritizing adaptability, precision, and resource optimization. These innovations collectively position RAG systems as scalable solutions for dynamic, data-intensive environments.