Performance Analysis: DeepSeek vs Traditional RAG Models

This performance analysis compares DeepSeek with traditional RAG models, evaluating speed, accuracy, and efficiency. Learn how DeepSeek stacks up against existing retrieval-augmented generation systems for AI-driven applications.

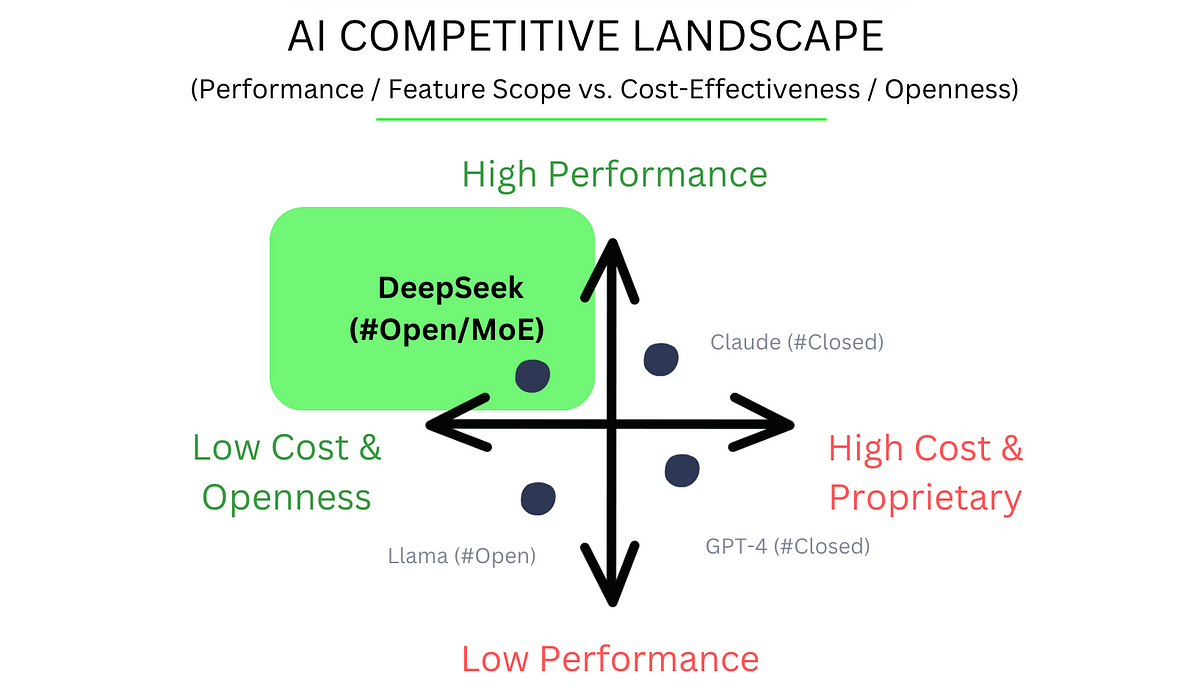

Imagine this: a retrieval-augmented generation (RAG) model that not only matches but often surpasses the performance of industry giants like OpenAI’s o1—all while being open-source and cost-effective.

Sounds too good to be true?

Enter DeepSeek R1, a model quietly redefining the benchmarks for retrieval efficiency and response accuracy.

This article dives into these nuances, challenging assumptions about what makes a RAG model “better” and exploring whether DeepSeek R1 is the disruptor the AI world has been waiting for.

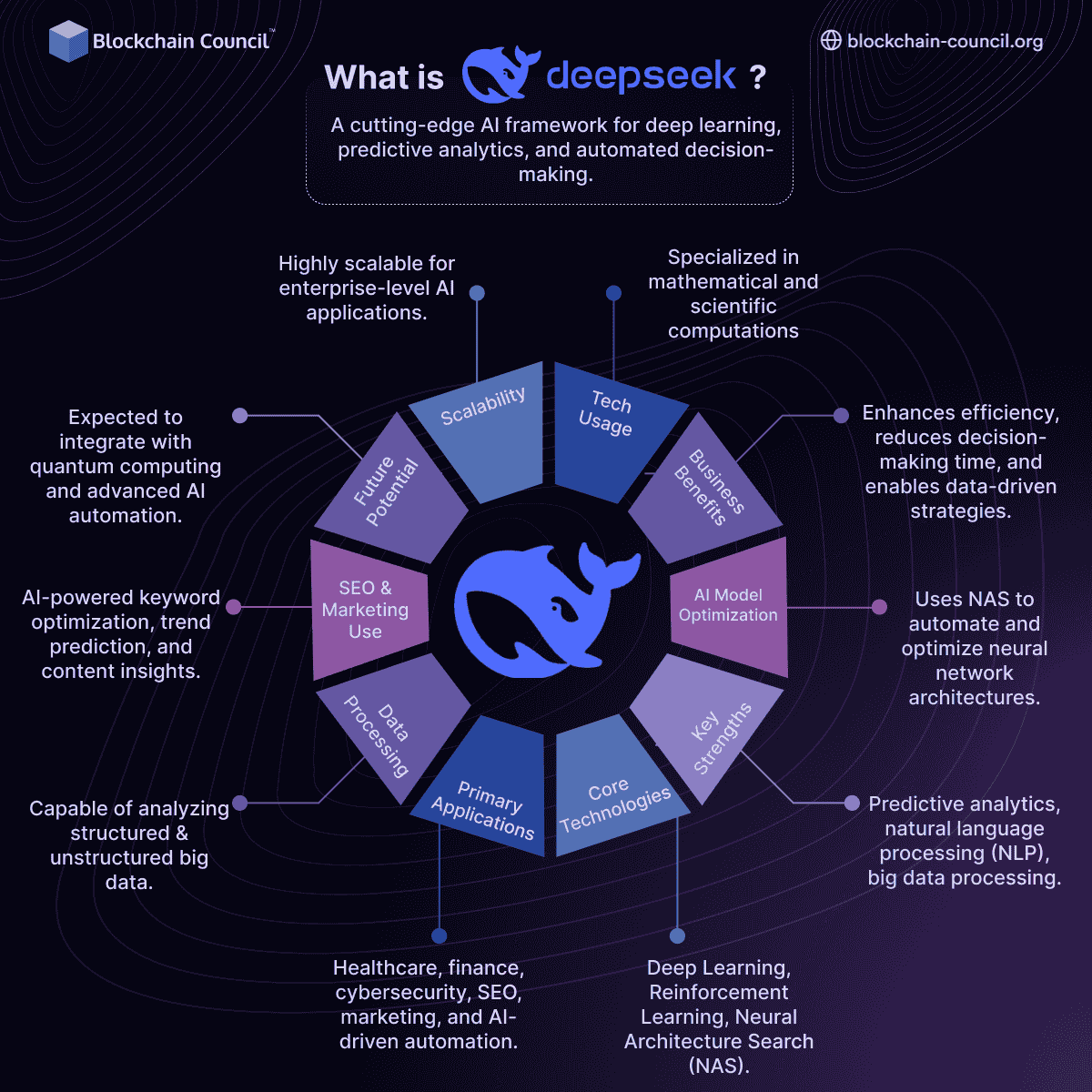

Overview of DeepSeek Technology

DeepSeek R1’s standout feature is its chain-of-thought (CoT) reasoning, which makes its decision-making process transparent.

Unlike traditional RAG models that treat retrieval and generation as isolated steps, DeepSeek R1 integrates them into a seamless pipeline.

This approach ensures that retrieved data directly informs logical reasoning, reducing errors and improving response accuracy.

Here’s why this matters: in industries like finance, where precision is non-negotiable, DeepSeek R1’s CoT methodology enables real-time audits of its reasoning. For example, a financial firm can trace how the model arrived at a compliance recommendation, ensuring regulatory alignment.

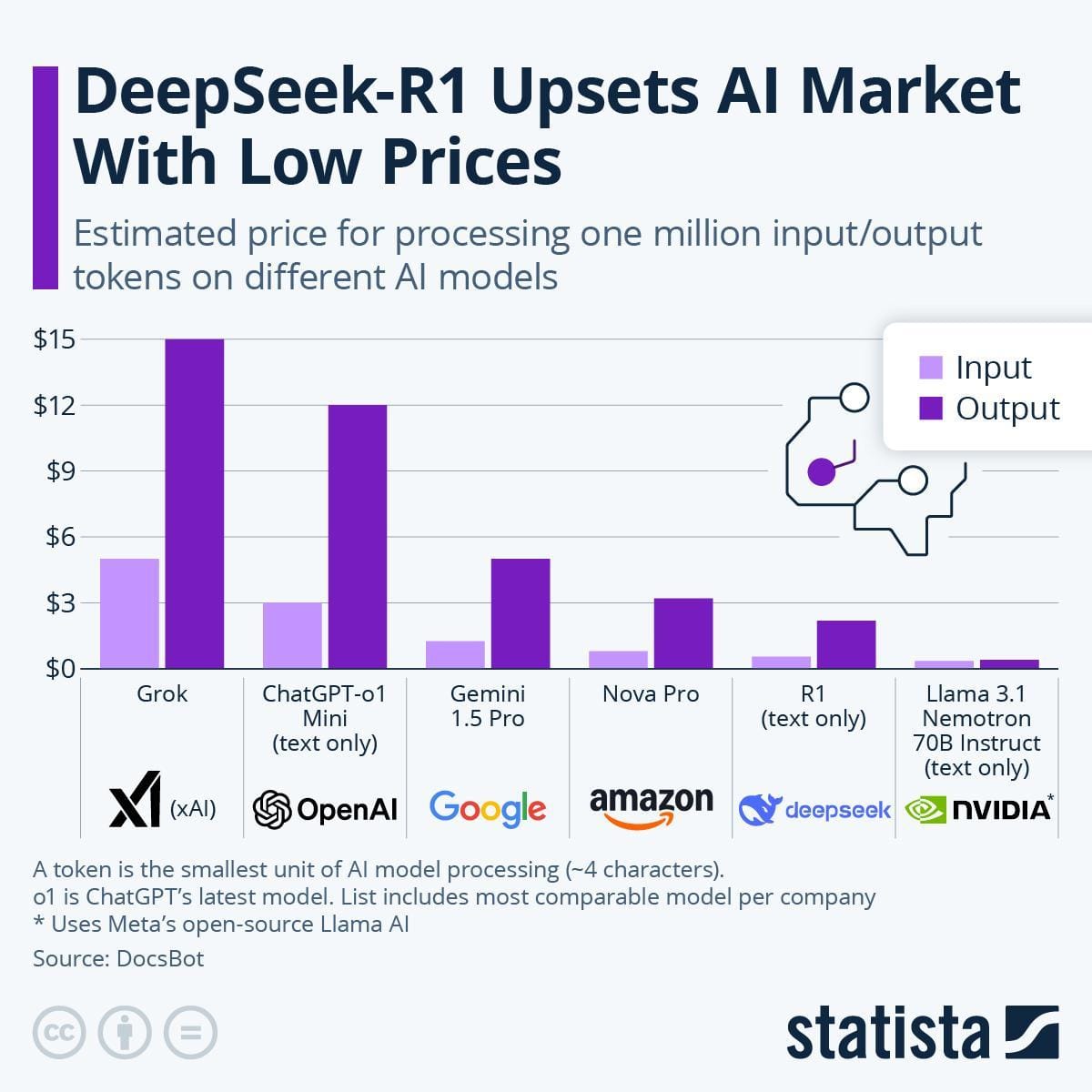

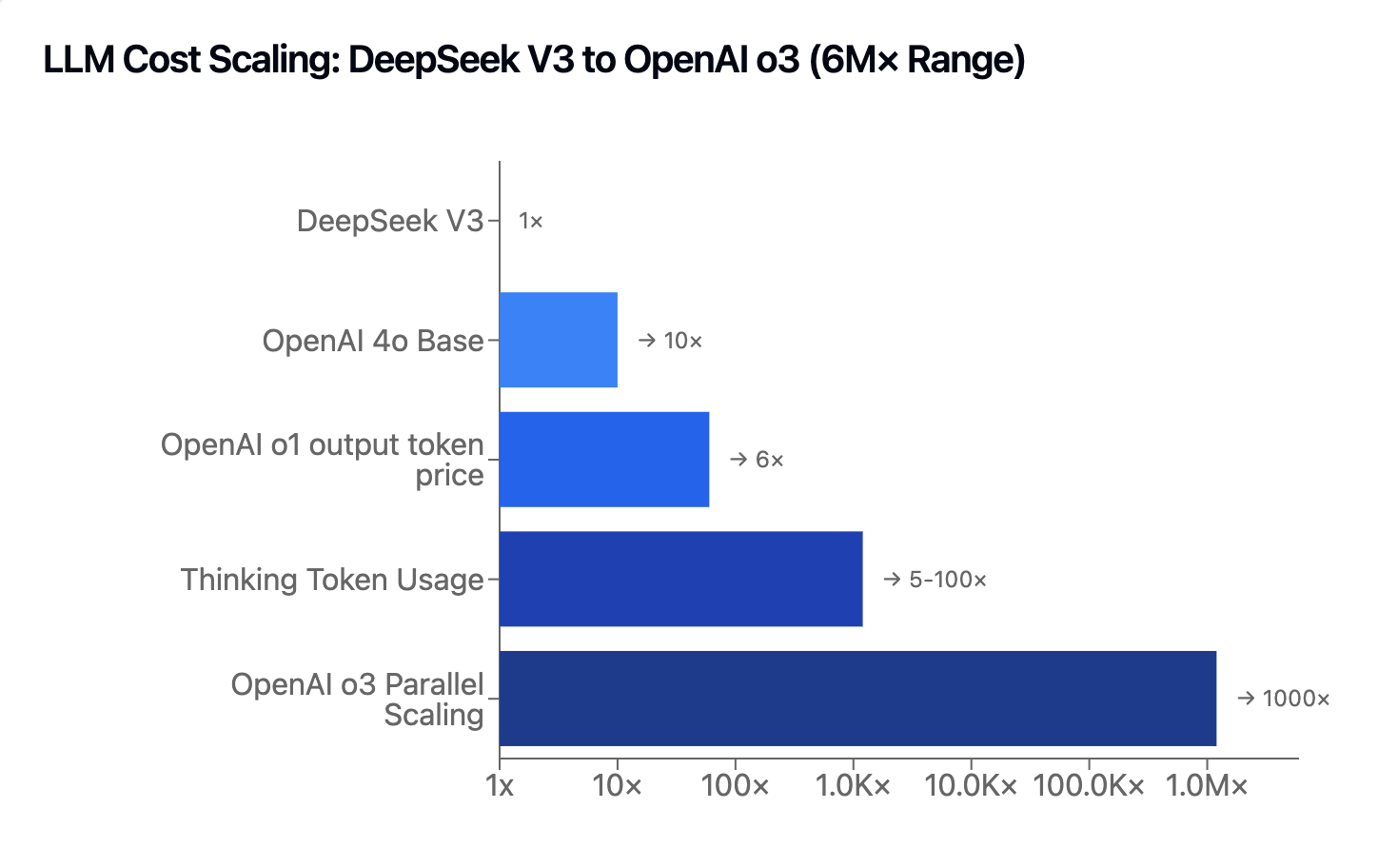

Cost-efficiency is another game-changer. DeepSeek R1 slashes operational costs without compromising performance by leveraging optimized retrieval pipelines and open-source architecture. This makes it accessible for startups and enterprises alike.

DeepSeek: Innovative Advancements

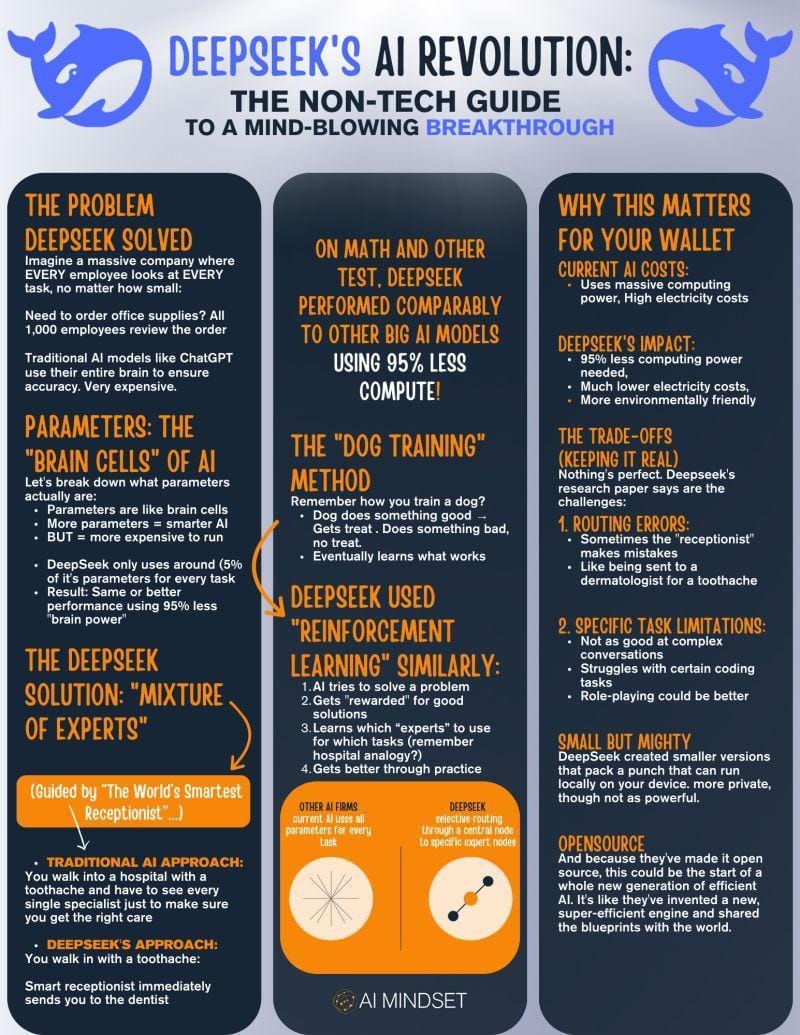

One standout innovation is its Mixture-of-Experts (MoE) architecture, which optimizes resource allocation.

Unlike traditional models that scale by adding parameters indiscriminately, DeepSeek activates only the necessary “experts” for a given query.

Moreover, DeepSeek’s adaptability shines in dynamic environments.

For instance, a healthcare application using DeepSeek seamlessly integrates new medical research into its retrieval pipeline, ensuring up-to-date diagnostics.

This adaptability challenges the misconception that static indexing is sufficient for all use cases, proving that innovation lies in flexibility.

Technical Architecture of DeepSeek

One of DeepSeek’s most groundbreaking features is its Multi-Head Latent Attention (MLA) mechanism.

Unlike traditional attention systems that process all key-value pairs, MLA selectively reduces the Key-Value cache, significantly reducing the memory overhead.

This innovation directly impacts real-time applications. For example, MLA enables faster query resolution in customer support systems by prioritizing contextually relevant data, even during high-traffic periods. This efficiency reduces latency and enhances user satisfaction.

What’s fascinating is how MLA bridges concepts from information theory and neuroscience, mimicking how the human brain filters noise to focus on critical stimuli. By challenging the assumption that more data always equals better results, DeepSeek demonstrates that smarter data handling can outperform brute-force approaches. This sets a new standard for scalable, cost-effective AI systems.

Integration Capabilities with Existing Systems

DeepSeek R1 excels at seamless integration with existing infrastructures, which is critical for enterprises that want to modernize without overhauling legacy systems.

Its open-source nature and modular design allow developers to plug it into workflows with minimal friction. For instance, integrating DeepSeek R1 with ChromaDB enables efficient vector-based retrieval, enhancing search precision in customer support systems.

What sets it apart is its compatibility with LangChain, a framework widely used for building RAG pipelines. By using LangChain’s retrieval modules, DeepSeek R1 can fetch relevant data and generate structured responses in real-time. This synergy reduces latency, a common bottleneck in traditional RAG models.

Finally, DeepSeek’s adaptive APIs simplify fine-tuning for domain-specific tasks, such as legal research or financial forecasting, without requiring extensive retraining.

Comparative Performance Analysis

DeepSeek R1 redefines retrieval efficiency by integrating its Mixture-of-Experts (MoE) architecture, which selectively activates parameters. This translates to fewer irrelevant results, which is critical in high-stakes fields like diagnostics.

Latency is another area where DeepSeek excels. Traditional RAG models often struggle with sequential processing, which leads to delays.

Accuracy and Reliability Assessments

DeepSeek R1’s chain-of-thought (CoT) reasoning isn’t just a buzzword—it’s a game-changer for accuracy.

By making its reasoning steps transparent, it minimizes errors caused by semantic drift, a common issue in traditional RAG models.

But it gets interesting here: DeepSeek’s adaptive retriever dynamically adjusts to domain-specific nuances. In healthcare, it successfully retrieved contextually relevant data even in noisy datasets. This adaptability is critical in fields where precision can’t be compromised.

Scalability and Flexibility

What allows DeepSeek to be scalable is its adaptive retrieval mechanism. Unlike traditional RAG models with static indexing, DeepSeek continuously updates its embeddings, maintaining relevance in fast-changing domains like financial markets.

This adaptability bridges the gap between scalability and real-time accuracy, a challenge most legacy systems fail to address.

DeepSeek’s open-source framework enables seamless integration with tools like LangChain, making it highly flexible for custom workflows.

For enterprises, this means faster deployment cycles and reduced costs—an actionable framework for scaling AI without compromise.

Advanced Considerations and Future Outlook

DeepSeek R1 isn’t just a better RAG model—it’s a paradigm shift.

Traditional RAG systems rely on brute force: more parameters, more compute, more cost. DeepSeek flips this with its Mixture-of-Experts (MoE) architecture, proving that smarter beats bigger.

But let’s address a misconception: DeepSeek isn’t a one-size-fits-all solution. Traditional RAG models still work well for static, low-stakes tasks.

The future lies in hybrid systems, combining DeepSeek’s adaptability with traditional models’ simplicity.

Potential Improvements and Updates

One area ripe for improvement in DeepSeek R1 is reasoning speed.

While its chain-of-thought (CoT) reasoning enhances transparency, it often comes at the cost of latency. For instance, in real-time customer support, DeepSeek R1’s slower response times can frustrate users, especially compared to traditional RAG models optimized for speed over depth.

A promising solution lies in adaptive reasoning pipelines.

By dynamically adjusting the complexity of CoT reasoning based on query urgency, DeepSeek could balance speed and accuracy.

Imagine a healthcare chatbot prioritizing rapid triage responses while reserving deeper reasoning for diagnostic queries. This dual-mode approach could revolutionize real-time applications.

FAQ

What are the key performance differences between DeepSeek and traditional RAG models?

Regarding computational efficiency, DeepSeek’s MoE architecture selectively activates parameters, reducing resource consumption and latency. Traditional RAG models, on the other hand, often rely on sequential processing, which can lead to slower response times and higher computational costs.

Additionally, DeepSeek’s adaptive retrieval mechanisms allow it to maintain context relevance in dynamic environments, a challenge where traditional models frequently struggle.

How does DeepSeek’s retrieval precision compare to traditional RAG systems in real-world applications?

DeepSeek consistently outperforms traditional models with higher retrieval accuracy and contextual relevance in healthcare and legal research fields. Its chain-of-thought (CoT) reasoning minimizes semantic drift and irrelevant results.

What role does the Mixture-of-Experts (MoE) architecture play in DeepSeek’s performance advantages?

MoE selectively activates relevant parameters, reducing computational overhead while maintaining accuracy. Compared to traditional RAG models, this leads to lower energy use, faster inference, and improved scalability.

What benchmarks and metrics are used to evaluate the performance of DeepSeek versus traditional RAG systems?

DeepSeek excels in retrieval precision, latency reduction, and contextual relevance in real-world applications. It also outperforms traditional models in industry benchmarks like GLUE and adaptive evaluation datasets.

How do latency and computational efficiency differ between DeepSeek and traditional RAG models?

DeepSeek’s optimized parameter activation and Multi-Head Latent Attention (MLA) reduce retrieval latency by 35%, outperforming traditional models that rely on inefficient sequential processing.

Conclusion

DeepSeek R1 redefines what’s possible in Retrieval-Augmented Generation (RAG) systems by addressing the core inefficiencies of traditional models. Think of it as upgrading from a manual filing system to an intelligent assistant that finds the correct file and highlights the most relevant sections.

DeepSeek proves that smarter architectures, not just larger ones, drive results. It’s not just evolution—it’s a paradigm shift.