The Evolution of Generative AI: From GPT to Modern LLMs

How did generative AI evolve into today’s powerful models? Dive into the fascinating journey from GPT to the cutting-edge modern LLMs shaping our world!

In 2021, fewer than 10% of businesses utilized generative AI; today, it’s reshaping industries globally. How did this leap happen, and what does it reveal about the future of human-machine creativity?

Overview of Generative AI and Its Significance

Generative AI’s transformative power lies in its ability to create content that is often difficult to distinguish from human output. This stems from advances in self-supervised learning, where models like GPT learn from vast unlabeled datasets by predicting the next token, which lets them generate coherent sequences.

A key innovation is the Transformer architecture, which built its design entirely around attention mechanisms that let models focus on the most relevant parts of the input. This approach revolutionized natural language processing (NLP), allowing for nuanced understanding and generation of text, and was later extended to images and even code.

Real-world applications highlight its significance. In healthcare, generative AI assists in drug discovery by proposing candidate molecular structures. In marketing, it personalizes campaigns by generating tailored content, boosting engagement and conversion rates.

However, its success depends on data quality. Poorly curated datasets can introduce biases, leading to ethical concerns. This underscores the need for transparent data practices and human oversight to ensure fairness and accuracy.

Generative AI also bridges disciplines, influencing fields like artificial creativity and cognitive science. By mimicking human-like creativity, it challenges our understanding of originality and innovation, sparking debates about the boundaries of machine intelligence.

Looking ahead, integrating generative AI with domain-specific knowledge could unlock unprecedented potential, from automating complex workflows to redefining human-machine collaboration. The question remains: how do we balance innovation with responsibility?

Purpose and Scope of the Article

This article dissects generative AI’s evolution, focusing on transformative breakthroughs like multimodal models. By analyzing technical advancements, ethical challenges, and real-world applications, it bridges AI innovation with societal implications, offering actionable insights for responsible adoption.

Foundations of Generative AI Prior to GPT

The earliest generative systems relied on rule-based methods and statistical models like Hidden Markov Models (HMMs). These approaches, while effective for structured tasks, lacked the flexibility to handle nuanced, unstructured data.

Statistical Language Models and N-Grams

N-gram models laid the groundwork for early language modeling by estimating each word’s probability from the fixed-length sequence of words preceding it. For example, a trigram model predicts a word from the two preceding words, which yields locally coherent text.

However, data sparsity posed challenges. Rare word combinations often lacked sufficient training data, leading to inaccuracies. Techniques like smoothing (e.g., Good-Turing or Kneser-Ney) mitigated this by redistributing probabilities to unseen n-grams, improving performance.
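To make the counting and smoothing concrete, here is a minimal Python sketch of a trigram model trained on a hypothetical toy corpus. It uses add-k (Laplace) smoothing, the simplest way to reserve probability for unseen trigrams; Good-Turing and Kneser-Ney redistribute that mass more carefully, so treat this as an illustration of the idea rather than either of those methods. The function names are illustrative.

```python
from collections import Counter

def train_trigram(tokens):
    """Count trigrams and the two-word histories that precede a third word."""
    triples = list(zip(tokens, tokens[1:], tokens[2:]))
    trigram_counts = Counter(triples)
    history_counts = Counter((w1, w2) for w1, w2, _ in triples)
    return trigram_counts, history_counts, set(tokens)

def trigram_prob(w1, w2, w3, trigram_counts, history_counts, vocab, k=1.0):
    """P(w3 | w1, w2) with add-k smoothing (Laplace smoothing when k = 1).

    Adding k to every count shifts some probability mass to trigrams that
    never appeared in training, so unseen continuations are not assigned zero.
    """
    numerator = trigram_counts[(w1, w2, w3)] + k
    denominator = history_counts[(w1, w2)] + k * len(vocab)
    return numerator / denominator

# Toy corpus (hypothetical); real n-gram models were trained on millions of sentences.
tokens = "the cat sat on the mat the cat sat on the rug".split()
tri, hist, vocab = train_trigram(tokens)
print(trigram_prob("the", "cat", "sat", tri, hist, vocab))  # seen: (2 + 1) / (2 + 6) = 0.375
print(trigram_prob("the", "cat", "on", tri, hist, vocab))   # unseen: (0 + 1) / (2 + 6) = 0.125
```

Because every count in the denominator is inflated by the same k, the smoothed probabilities for a given history still sum to 1 over the vocabulary.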

Real-world applications included speech recognition and machine translation, where n-grams provided foundational insights. Yet, their inability to capture long-term dependencies limited their scalability, paving the way for neural network-based approaches.

Looking forward, understanding n-grams’ limitations highlights the importance of contextual embeddings in modern models, bridging the gap between statistical and neural paradigms.

Recurrent Neural Networks and LSTMs

LSTMs mitigated the vanishing gradient problem of standard RNNs by introducing gating mechanisms (input, forget, and output gates). These gates regulate information flow, enabling the retention of long-term dependencies critical for tasks like machine translation and speech recognition.

A lesser-known factor is the cell state, which acts as a memory pipeline, decoupling long-term storage from short-term computations. This innovation significantly improved performance on sequential tasks requiring context over extended timeframes.
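The gate equations can be sketched as a single LSTM time step in plain Python. This assumes the standard formulation (sigmoid gates, a tanh candidate) with untrained random weights; `lstm_step`, `affine`, and `random_params` are hypothetical helper names, and real implementations rely on optimized tensor libraries.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def affine(W, U, b, x, h):
    """Compute W @ x + U @ h + b using plain Python lists."""
    return [
        sum(w * xj for w, xj in zip(w_row, x)) + sum(u * hj for u, hj in zip(u_row, h)) + bi
        for w_row, u_row, bi in zip(W, U, b)
    ]

def lstm_step(x, h, c, params):
    """One LSTM time step. params maps each gate name to (W, U, b)."""
    i = [sigmoid(z) for z in affine(*params["input"], x, h)]        # input gate
    f = [sigmoid(z) for z in affine(*params["forget"], x, h)]       # forget gate
    o = [sigmoid(z) for z in affine(*params["output"], x, h)]       # output gate
    g = [math.tanh(z) for z in affine(*params["candidate"], x, h)]  # candidate memory
    # Cell state: keep a gated share of old memory, add a gated share of new content.
    c_new = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, g)]
    # Hidden state: a gated, squashed read-out of the cell state.
    h_new = [ok * math.tanh(ck) for ok, ck in zip(o, c_new)]
    return h_new, c_new

def random_params(input_size, hidden_size, seed=0):
    """Small random weights for illustration (no training is performed)."""
    rng = random.Random(seed)
    def mat(rows, cols):
        return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]
    return {
        gate: (mat(hidden_size, input_size), mat(hidden_size, hidden_size), [0.0] * hidden_size)
        for gate in ("input", "forget", "output", "candidate")
    }

params = random_params(input_size=3, hidden_size=2)
h, c = [0.0, 0.0], [0.0, 0.0]
for x in ([1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]):
    h, c = lstm_step(x, h, c, params)
print(h, c)
```

When the forget gate saturates near 1 and the input gate near 0, `c_new` stays essentially equal to `c`, which is the "memory pipeline" behavior of the cell state.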

Real-world applications include sentiment analysis, where LSTMs capture nuanced emotional shifts in text. Additionally, their adaptability has influenced time-series forecasting in finance and healthcare, demonstrating cross-disciplinary relevance.

Attention mechanisms were in fact first combined with recurrent encoder-decoder models for machine translation, pairing RNN memory with a learned focus on relevant inputs; that work paved the way from sequential processing to the parallel architectures that followed.

The Transformer Revolution: Introducing a New Architecture

Transformers revolutionized AI by replacing sequential processing with parallelism, enabling models to analyze entire sequences simultaneously. This breakthrough, powered by the self-attention mechanism, allowed models to capture long-range dependencies with unprecedented efficiency.

Unlike RNNs, which process data step-by-step, transformers use multi-head attention to focus on multiple input aspects simultaneously. For example, in translation, they align words across languages, improving accuracy and fluency.

Google’s BERT and OpenAI’s GPT-3 exemplify this architecture’s impact. BERT excels at understanding context, while GPT-3 generates coherent, human-like text. Together with later transformer-based models, they demonstrate the architecture’s versatility across tasks like coding, summarization, and image generation.

A common misconception is that transformers are limited to text. However, their adaptability extends to protein folding (AlphaFold) and image synthesis (DALL-E), showcasing their cross-disciplinary potential.

By replacing step-by-step recurrence with operations that run in parallel across the whole sequence, transformers have redefined scalability, although standard self-attention still grows quadratically in cost with sequence length. Innovations such as sparse attention aim to reduce that cost, unlocking possibilities in real-time applications and multimodal AI systems.

Understanding the Transformer Model

At the heart of the transformer lies the self-attention mechanism, which evaluates relationships between all input tokens simultaneously. This approach enables models to weigh contextual relevance, even for distant words, with remarkable precision.

Key Components:

- Queries, Keys, and Values: Each token is projected into a query, a key, and a value vector. A token’s query is compared with every token’s key to produce attention weights, and those weights combine the value vectors into a weighted context representation.

- Multi-Head Attention: By processing multiple attention heads in parallel, transformers capture diverse contextual nuances, enhancing understanding across complex sequences.
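A minimal plain-Python sketch of scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V, plus a simplified multi-head wrapper. The input vectors are arbitrary toy values, and the wrapper omits the learned projection matrices that real transformers use, so it shows the data flow rather than a trainable layer.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(Q, K, V):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V.

    Q is n_q x d_k, K is n_k x d_k, V is n_k x d_v (lists of row vectors).
    Returns the outputs and the attention-weight matrix.
    """
    d_k = len(K[0])
    outputs, weights = [], []
    for q in Q:
        # Compare this query with every key.
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in K]
        w = softmax(scores)
        weights.append(w)
        # Weighted sum of the value vectors.
        outputs.append([sum(wj * v[col] for wj, v in zip(w, V)) for col in range(len(V[0]))])
    return outputs, weights

def multi_head(X, num_heads):
    """Attend separately on num_heads slices of each vector, then concatenate.

    Simplification: real transformers apply learned per-head projections
    W_Q, W_K, W_V and an output projection W_O; here each slice of X is used
    directly as query, key and value.
    """
    d_model = len(X[0])
    if d_model % num_heads:
        raise ValueError("d_model must be divisible by num_heads")
    d_head = d_model // num_heads
    head_outputs = []
    for h in range(num_heads):
        part = [row[h * d_head:(h + 1) * d_head] for row in X]
        out, _ = attention(part, part, part)
        head_outputs.append(out)
    return [sum((head[i] for head in head_outputs), []) for i in range(len(X))]

# Three toy token vectors with d_model = 4.
X = [[1.0, 0.0, 0.0, 1.0], [0.0, 1.0, 1.0, 0.0], [1.0, 1.0, 0.0, 0.0]]
out, w = attention(X, X, X)
print([round(p, 3) for p in w[0]])  # how strongly token 0 attends to each token
print(multi_head(X, num_heads=2))
```

Each head sees a different slice of the representation, so different heads can end up emphasizing different token relationships.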

Real-World Applications:

- Machine Translation: Transformers outperform traditional models by aligning words across languages dynamically, improving fluency and accuracy.

- Healthcare: In protein structure prediction (e.g., AlphaFold), attention-based modules model interactions between amino-acid residues, a capability with important applications in drug discovery.

Lesser-Known Insights:

- Positional Encoding: Since self-attention has no inherent notion of sequence order, positional encodings are added to the input vectors, preserving word or frame order in tasks like speech recognition.
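One common choice is the fixed sinusoidal encoding from the original Transformer paper, sketched below. Many models, GPT among them, instead learn their positional embeddings, so this is one option rather than the universal method; the helper names are hypothetical.

```python
import math

def sinusoidal_positions(seq_len, d_model):
    """PE[pos][2k] = sin(pos / 10000^(2k/d)), PE[pos][2k+1] = cos(pos / 10000^(2k/d))."""
    pe = []
    for pos in range(seq_len):
        row = []
        for i in range(d_model):
            # Paired dimensions (2k, 2k+1) share one frequency.
            angle = pos / (10000 ** ((i - i % 2) / d_model))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        pe.append(row)
    return pe

def add_positions(embeddings):
    """Add the encoding element-wise to a list of token embeddings."""
    pe = sinusoidal_positions(len(embeddings), len(embeddings[0]))
    return [[e + p for e, p in zip(erow, prow)] for erow, prow in zip(embeddings, pe)]

pe = sinusoidal_positions(seq_len=4, d_model=6)
print([round(v, 3) for v in pe[0]])  # position 0: [0.0, 1.0, 0.0, 1.0, 0.0, 1.0]
```

Because the encoding differs per position, two identical token embeddings at different positions become distinguishable once `add_positions` is applied.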

Challenging Assumptions:

While often seen as computationally expensive, innovations like sparse attention reduce overhead, making transformers viable for real-time systems.
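A rough sketch of why sparse attention helps, assuming a simple sliding-window pattern (one of several sparse patterns; Longformer, for example, combines it with a few global tokens). It counts how many query-key scores a dense layer computes versus a windowed one; the sequence length and window size are arbitrary.

```python
def sliding_window_mask(seq_len, window):
    """mask[i][j] is True when token i may attend to token j.

    Each token attends only to neighbours at most `window` positions away,
    the local pattern used by sliding-window sparse attention.
    """
    return [[abs(i - j) <= window for j in range(seq_len)] for i in range(seq_len)]

def scored_pairs(mask):
    """Number of query-key scores that actually have to be computed."""
    return sum(sum(row) for row in mask)

n, w = 512, 32
dense = n * n  # full attention: every query scores every key
local = scored_pairs(sliding_window_mask(n, w))
print(dense, local, f"{local / dense:.1%}")
```

The full boolean mask is built here only for illustration; an efficient kernel computes just the in-window scores, so for a fixed window the cost grows roughly linearly with sequence length instead of quadratically.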

Looking Ahead:

Future advancements in multimodal learning could integrate text, images, and audio seamlessly, unlocking transformative applications in AI-driven creativity and decision-making.

Advantages Over Previous Architectures

Transformers excel at parallel processing, whereas RNNs process tokens sequentially. This enables faster training on large datasets, which is crucial for scaling models like GPT-3 to 175 billion parameters.

Why It Works:

- Self-Attention Mechanism: Connects any two positions directly, capturing long-range dependencies without the long chains of recurrent steps through which RNN gradients vanish.

- Parallelization: Processes entire sequences simultaneously during training, drastically reducing wall-clock training time on parallel hardware.

Real-World Applications:

- Content Generation: Models like GPT-3 generate coherent, context-aware text at unprecedented speeds.

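- Vision Tasks: Vision Transformers (ViTs) can match or outperform CNNs in image classification when pre-trained on large datasets, in part because attention lets them relate distant image regions from the first layer.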

Lesser-Known Factors:

- Data Efficiency: While transformers demand large datasets, techniques like transfer learning mitigate this, enabling fine-tuning on smaller datasets.

Challenging Assumptions:

Despite high computational costs, sparse attention innovations reduce resource demands, making transformers accessible for broader applications.

Actionable Insight:

Adopt hybrid architectures combining transformers with efficient models like CNNs for resource-constrained tasks, balancing performance and scalability.

GPT: Generative Pre-trained Transformer

GPT popularized generative pre-training of a transformer on vast unlabeled text, followed by fine-tuning for specific tasks. This two-step process mirrors how humans learn: general knowledge first, then specialization.

Key Insight:

- Pre-training enables models to grasp linguistic patterns, while fine-tuning adapts them to niche applications like legal analysis or creative writing.

Concrete Example:

GPT-3, with 175 billion parameters, performs well on tasks like summarization and translation, showcasing broad versatility.

Unexpected Connection:

The self-attention mechanism at the core of GPT also underpins breakthroughs beyond language, such as protein structure prediction in AlphaFold, highlighting its cross-disciplinary impact.

Addressing Misconceptions:

GPT doesn’t “understand” language but predicts patterns. Its coherence stems from probabilistic modeling, not true comprehension.
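The probabilistic view can be made concrete with a small sketch: a model emits a score (logit) for every vocabulary token, softmax turns the scores into a distribution, and text is generated by sampling from it. The vocabulary and logits below are hypothetical, and temperature scaling is one common decoding knob among several.

```python
import math
import random

def next_token_distribution(logits, temperature=1.0):
    """Softmax over vocabulary scores; a lower temperature sharpens the distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample(vocab, logits, temperature=1.0, rng=random):
    """Draw one token in proportion to its probability."""
    probs = next_token_distribution(logits, temperature)
    return rng.choices(vocab, weights=probs, k=1)[0]

# Hypothetical scores a model might assign after the prompt "The cat sat on the".
vocab = ["mat", "rug", "moon", "sofa"]
logits = [3.0, 2.0, -1.0, 1.5]

probs = next_token_distribution(logits)
print({t: round(p, 3) for t, p in zip(vocab, probs)})
print(sample(vocab, logits, temperature=0.7, rng=random.Random(0)))

# Pre-training minimizes this cross-entropy, averaged over every position in the corpus.
loss = -math.log(probs[vocab.index("mat")])
print(round(loss, 3))
```

Nothing in this loop requires comprehension: fluent output emerges from repeatedly picking likely continuations under the learned distribution.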

Actionable Takeaway:

Leverage GPT’s fine-tuning capabilities to create domain-specific tools, enhancing productivity in fields like healthcare, education, and content creation.

GPT’s Novel Approach to Language Modeling

GPT’s self-attention mechanism revolutionized language modeling by letting each word weigh its relationship to every preceding word in the context, rather than passing information step by step as RNNs do. This approach supports contextual coherence, even in long, complex texts.
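In GPT-style decoders, each position may attend only to itself and earlier positions, which is enforced with a causal mask. The sketch below assumes a precomputed matrix of attention scores (hypothetical values) and shows how masking gives future positions zero weight.

```python
import math

def causal_attention_weights(scores):
    """Apply a causal mask to a square score matrix, then softmax each row.

    Row i may only use columns 0..i, so a left-to-right model never attends
    to the tokens it is trying to predict.
    """
    weights = []
    for i, row in enumerate(scores):
        visible = row[: i + 1]  # keys at positions 0..i
        m = max(visible)
        exps = [math.exp(s - m) for s in visible]
        total = sum(exps)
        # Future positions receive exactly zero weight.
        weights.append([e / total for e in exps] + [0.0] * (len(row) - i - 1))
    return weights

# Hypothetical scores (query i, key j) for a three-token sequence.
scores = [[0.5, 2.0, 1.0],
          [1.0, 1.0, 3.0],
          [0.0, 0.0, 0.0]]
weights = causal_attention_weights(scores)
for row in weights:
    print([round(x, 3) for x in row])
# [1.0, 0.0, 0.0]
# [0.5, 0.5, 0.0]
# [0.333, 0.333, 0.333]
```

Note that the high scores for future keys in the first two rows are ignored entirely.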

Real-World Applications:

- Legal Document Analysis: Extracts key clauses with precision.

- Creative Writing: Generates coherent narratives spanning multiple chapters.

Lesser-Known Factor:

Positional encodings preserve word order, ensuring contextual relevance without traditional recurrence, a breakthrough for scalability.

Actionable Insight:

Integrate GPT into workflows requiring context-sensitive automation, such as contract review or personalized marketing, to enhance efficiency and accuracy.

Impact on Natural Language Processing

GPT’s zero-shot learning redefined NLP by enabling task generalization without task-specific training. This innovation reduces dependency on labeled datasets, accelerating deployment across diverse applications.

Real-World Applications:

- Healthcare: Summarizes patient records for faster diagnostics.

- Education: Generates adaptive learning content tailored to student needs.

Lesser-Known Factor:

Fine-tuning on domain-specific data enhances accuracy while preserving generalization, bridging gaps between broad and niche applications.

Actionable Insight:

Leverage GPT for low-resource languages or emerging domains, where labeled data scarcity limits traditional NLP models, ensuring inclusivity and innovation.

Advancements with GPT-2 and GPT-3

GPT-2 introduced scalable text generation, producing coherent paragraphs, while GPT-3’s 175 billion parameters enabled nuanced understanding across domains.

Unexpected Connection: GPT-3’s adaptability parallels human multitasking, excelling in translation, coding, and creative writing without retraining.

Actionable Insight: Use GPT-3 for cross-disciplinary tasks, bridging gaps in fields like scientific research and content creation.

Scaling Up: The Importance of Model Size

Larger models like GPT-3 (175 billion parameters) excel due to their ability to capture intricate patterns in vast datasets. This scaling enables few-shot learning, solving tasks with minimal examples, unlike smaller models.
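A back-of-the-envelope calculation shows where GPT-3’s 175 billion parameters come from. It uses the common approximation of about 12 · d_model² parameters per transformer layer (attention projections plus a feed-forward block with a 4x expansion) and the hyperparameters reported for the largest GPT-3 model; biases and layer norms are ignored, so the result is approximate.

```python
def approx_decoder_params(n_layers, d_model, vocab_size, context_len):
    """Approximate parameter count of a GPT-style decoder-only transformer.

    Per layer: W_Q, W_K, W_V, W_O (4 * d_model^2) plus a feed-forward block
    with a 4x hidden expansion (8 * d_model^2), about 12 * d_model^2 in total.
    Token and position embeddings are added; biases and layer norms are ignored.
    """
    per_layer = 12 * d_model ** 2
    embeddings = (vocab_size + context_len) * d_model
    return n_layers * per_layer + embeddings

# Hyperparameters reported for the largest GPT-3 model.
gpt3 = approx_decoder_params(n_layers=96, d_model=12288, vocab_size=50257, context_len=2048)
print(f"{gpt3 / 1e9:.1f}B parameters")  # 174.6B parameters
```

Growing the hidden width or the layer count is what "scaling up" means in practice; because the per-layer term is quadratic in d_model, widening the model grows the count fastest.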

Real-World Impact: GPT-3’s size powers legal document analysis, reducing manual effort, and creative industries, generating scripts or designs.

Lesser-Known Factor: Larger models are also more sample-efficient, reaching a given performance with fewer training examples, which could benefit low-resource languages.

Actionable Insight: Prioritize scaling for complex, high-stakes tasks like medical diagnostics, where precision and adaptability are critical.

Zero-Shot and Few-Shot Learning Capabilities

Few-shot learning supplies a handful of examples in the prompt so the model adapts without retraining, which helps in low-data scenarios like rare disease diagnostics. Zero-shot learning goes further, handling a task from an instruction alone with no examples, which benefits multilingual translation and emergency response systems.

Lesser-Known Insight: Few-shot performance often depends as much on the diversity (and even the order) of the examples as on their quantity, and diverse examples tend to generalize better.

Actionable Framework: Optimize prompts with representative examples to maximize performance in dynamic, real-world applications like crisis management or personalized education.
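As a sketch of that framework, the helper below assembles an instruction, a handful of labeled examples, and a new input into one prompt. The prompt layout and the examples are hypothetical, no particular model or API is assumed, and dropping the examples turns it into a zero-shot prompt.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Combine an instruction, labelled examples and a new input into one prompt.

    With an empty `examples` list this reduces to a zero-shot prompt.
    """
    parts = [instruction.strip(), ""]
    for text, label in examples:
        parts.append(f"Input: {text}\nOutput: {label}\n")
    parts.append(f"Input: {query}\nOutput:")
    return "\n".join(parts)

# Hypothetical examples chosen to cover different labels rather than repeat one.
examples = [
    ("The battery lasts all day.", "positive"),
    ("The screen cracked within a week.", "negative"),
    ("It ships in a cardboard box.", "neutral"),
]
prompt = build_few_shot_prompt(
    "Classify the sentiment of each review as positive, negative, or neutral.",
    examples,
    "Setup took five minutes and everything just worked.",
)
print(prompt)
```

Ending the prompt with "Output:" invites the model to continue with a label in the same format as the examples.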

Emergence of Modern Large Language Models

Modern LLMs, like GPT-4, redefine scalability, integrating multimodal capabilities to process text, images, and code seamlessly. For instance, GPT-4’s image-to-text generation aids visually impaired users, showcasing practical inclusivity.

Unexpected Connection: These models bridge linguistics and neuroscience, mimicking human-like contextual understanding.

Actionable Insight: Prioritize domain-specific fine-tuning to unlock transformative applications in healthcare diagnostics and legal automation, ensuring precision and adaptability.

Defining Characteristics of Modern LLMs

Contextual Depth: Modern LLMs excel at long-context retention, enabling nuanced understanding across extended texts. For example, GPT-4 processes legal contracts, identifying inconsistencies and improving contractual accuracy.

Lesser-Known Factor: Sparse attention mechanisms optimize memory usage, enhancing scalability without sacrificing performance.

Actionable Insight: Leverage adaptive attention techniques for applications like scientific research analysis, ensuring precise, scalable insights in data-intensive fields.

Key Innovations Beyond GPT-3

Multimodal Integration: GPT-4 processes text and images, enabling tasks like visual Q&A and image captioning. This bridges linguistics and computer vision, enhancing accessibility tools for visually impaired users.

Lesser-Known Factor: Parameter efficiency reduces computational costs while maintaining performance.

Actionable Insight: Develop domain-specific multimodal models for industries like healthcare diagnostics, combining textual and visual data for precise, scalable solutions.

Advanced Techniques in Modern LLMs

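Sparse Attention and Sparse Activation: By letting each token attend only to a subset of positions, sparse attention reduces computational overhead while largely maintaining accuracy. A related idea, sparse activation, drives Google’s Switch Transformer, a mixture-of-experts model that routes each token to a single expert network and scales to 1.6 trillion parameters without a proportional increase in compute per token.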
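The Switch Transformer’s efficiency comes from sparse activation rather than sparse attention: a router sends each token to a single expert feed-forward network (top-1 routing). The sketch below assumes toy experts and router weights (all hypothetical) and omits the load-balancing loss and expert-capacity limits used in practice.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def switch_route(token, router_weights, experts):
    """Top-1 mixture-of-experts routing for a single token vector.

    router_weights holds one weight vector per expert; experts is a list of
    callables. Only the selected expert runs, so compute per token stays
    roughly constant however many experts (and parameters) the layer holds.
    """
    logits = [sum(w * x for w, x in zip(wv, token)) for wv in router_weights]
    gates = softmax(logits)
    best = max(range(len(experts)), key=lambda e: gates[e])
    # The expert output is scaled by its gate value.
    return best, [gates[best] * y for y in experts[best](token)]

calls = [0, 0, 0]

def make_expert(idx, factor):
    """Hypothetical expert: a scaling function that records how often it runs."""
    def expert(token):
        calls[idx] += 1
        return [factor * x for x in token]
    return expert

experts = [make_expert(0, 2.0), make_expert(1, -1.0), make_expert(2, 0.5)]
router_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]
chosen, output = switch_route([3.0, 0.5], router_weights, experts)
print(chosen, output, calls)
```

Adding more experts increases the parameter count, but each token still triggers only one expert call, as the `calls` counter shows.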

Unexpected Connection: Sparse models mirror human selective focus, prioritizing critical information over noise, enhancing real-world applications like fraud detection.

Actionable Insight: Implement sparse attention in resource-constrained environments to optimize performance without sacrificing precision.

Fine-Tuning and Transfer Learning Strategies

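Parameter-Efficient Fine-Tuning (PEFT): Techniques like LoRA and adapters enable fine-tuning with minimal computational resources. For example, the LoRA authors report reducing trainable parameters by roughly 10,000 times and GPU memory requirements by about 3 times when adapting GPT-3 175B, making LLM customization accessible to smaller enterprises.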
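A minimal sketch of a LoRA-adapted linear layer, following the formulation y = Wx + (alpha / r) · BAx from the LoRA paper: the pre-trained matrix W stays frozen and only the low-rank factors A and B are trained. The toy weights, the initialization scale, and the class name are assumptions for illustration, and training code is omitted.

```python
import random

def matvec(M, x):
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]

class LoRALinear:
    """y = W x + (alpha / r) * B (A x), with W frozen and only A and B trained.

    W is d_out x d_in, A is r x d_in and B is d_out x r. B starts at zero, so
    before any training the layer behaves exactly like the pre-trained one.
    """

    def __init__(self, W, r=2, alpha=4.0, seed=0):
        rng = random.Random(seed)
        d_out, d_in = len(W), len(W[0])
        self.W = W  # frozen pre-trained weights (never updated)
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(r)]
        self.B = [[0.0] * r for _ in range(d_out)]
        self.scale = alpha / r

    def __call__(self, x):
        base = matvec(self.W, x)
        update = matvec(self.B, matvec(self.A, x))
        return [b + self.scale * u for b, u in zip(base, update)]

    def trainable_params(self):
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])

W = [[1.0, 0.0, 2.0], [0.0, 1.0, -1.0]]  # toy "pre-trained" 2 x 3 weights
layer = LoRALinear(W, r=2)
print(layer([1.0, 2.0, 3.0]))  # [7.0, -1.0]: identical to W x while B is zero

# Trainable-parameter count for one 4096 x 4096 projection with rank r = 8.
d, r = 4096, 8
full, lora = d * d, r * (d + d)
print(full, lora, f"{lora / full:.2%}")
```

The rank-8 example shows where the memory savings come from: for that projection, only about 0.4% as many parameters need gradients and optimizer state.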

Lesser-Known Factor: PEFT aligns with sustainability goals, lowering energy consumption while maintaining performance, crucial for eco-conscious AI development.

Actionable Insight: Combine PEFT with active learning to iteratively refine models, ensuring adaptability to dynamic domains like real-time financial forecasting.

Incorporation of Reinforcement Learning from Human Feedback

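Reward Modeling: In RLHF, human evaluators rank model outputs, a reward model is trained to predict those preferences, and the language model is then optimized with reinforcement learning (for example, PPO) to maximize the learned reward. This approach improves helpfulness and contextual accuracy, as seen in ChatGPT’s conversational improvements.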
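The reward-modeling step is commonly trained with a pairwise (Bradley-Terry style) loss on ranked response pairs, as in InstructGPT. The sketch below assumes the reward scores have already been produced by some model; the scores are hypothetical, and the subsequent policy-optimization step is not shown.

```python
import math

def pairwise_preference_loss(reward_chosen, reward_rejected):
    """Bradley-Terry style loss: -log(sigmoid(r_chosen - r_rejected)).

    It is small when the reward model scores the human-preferred response
    higher and large when it prefers the rejected one.
    """
    z = reward_rejected - reward_chosen
    # Numerically stable softplus(z) = log(1 + exp(z)).
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))

# Hypothetical reward-model scores for (preferred, rejected) response pairs.
pairs = [(1.8, 0.3), (0.2, 0.9), (2.5, -1.0)]
losses = [pairwise_preference_loss(c, r) for c, r in pairs]
print([round(x, 3) for x in losses], round(sum(losses) / len(losses), 3))
```

The second pair, where the model currently prefers the rejected answer, contributes the largest loss, so training pushes its scores back toward the human ranking.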

Lesser-Known Factor: Incorporating safety criteria into the human feedback can reduce, though not eliminate, hallucinations and biases, which matters in critical domains like healthcare diagnostics.

Actionable Insight: Integrate comparative ranking with preference learning to refine nuanced tasks, such as legal document summarization, while maintaining ethical AI alignment.

Development of Multimodal Models

Cross-Modal Alignment: Techniques like contrastive learning align text and image embeddings, enabling seamless integration. For instance, CLIP excels in zero-shot image classification, bridging vision and language effectively.
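CLIP-style alignment can be sketched as a symmetric contrastive loss: each image should be most similar to its own caption within the batch, and vice versa. The embeddings below are hypothetical 3-dimensional vectors standing in for encoder outputs; 0.07 is CLIP’s initial temperature, which CLIP itself learns during training.

```python
import math

def normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def mean_cross_entropy(logits):
    """Average cross-entropy where the correct class for row i is column i."""
    total = 0.0
    for i, row in enumerate(logits):
        m = max(row)
        log_sum_exp = m + math.log(sum(math.exp(z - m) for z in row))
        total += log_sum_exp - row[i]
    return total / len(logits)

def contrastive_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric image-text contrastive loss; pair i is image i with caption i."""
    images = [normalize(v) for v in image_embs]
    texts = [normalize(v) for v in text_embs]
    # Cosine similarity of every image with every caption, sharpened by temperature.
    logits = [[sum(a * b for a, b in zip(img, txt)) / temperature for txt in texts] for img in images]
    image_to_text = mean_cross_entropy(logits)
    text_to_image = mean_cross_entropy([list(col) for col in zip(*logits)])
    return (image_to_text + text_to_image) / 2

# Hypothetical embeddings for three image/caption pairs.
image_embs = [[1.0, 0.1, 0.0], [0.0, 1.0, 0.2], [0.1, 0.0, 1.0]]
text_embs = [[0.9, 0.2, 0.1], [0.1, 0.8, 0.1], [0.0, 0.1, 1.1]]
aligned = contrastive_loss(image_embs, text_embs)
shuffled = contrastive_loss(image_embs, text_embs[1:] + text_embs[:1])
print(round(aligned, 3), round(shuffled, 3))  # matched pairs give the lower loss
```

Once trained this way, zero-shot classification reduces to embedding a caption per class and picking the one most similar to the image.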

Lesser-Known Factor: Temporal coherence in video-text models enhances applications like gesture recognition and video summarization, crucial for adaptive learning platforms.

Actionable Insight: Leverage co-training across modalities to improve contextual understanding, enabling breakthroughs in autonomous systems and assistive technologies.

Applications and Case Studies

Healthcare: Generative AI accelerates drug discovery, as seen in Insilico Medicine, which identified a novel drug candidate in 46 days. This contrasts with traditional methods requiring years, revolutionizing pharmaceutical timelines.

Marketing: Tools like Einstein GPT personalize campaigns, boosting email engagement rates by 20%. This highlights AI’s ability to predict consumer behavior, reshaping customer interactions.

Unexpected Connection: In architecture, AI-generated designs optimize sustainability, reducing material waste by 30%, showcasing its impact beyond conventional industries.

Actionable Insight: Integrate domain-specific fine-tuning to unlock AI’s potential in niche applications, driving innovation across sectors.

Natural Language Understanding and Generation

Contextual Mastery: Modern LLMs excel in context retention, enabling nuanced responses. For instance, ChatGPT adapts to legal queries, offering case-specific advice, bridging gaps in legal accessibility.

Lesser-Known Factor: Pragmatic inference, the ability to deduce implied meanings, enhances customer support by resolving ambiguous queries effectively.

Actionable Insight: Combine domain-specific datasets with reinforcement learning to refine contextual accuracy, ensuring reliable outputs in specialized fields like healthcare diagnostics.

Industry Implementations Across Sectors

Focused Insight: In manufacturing, generative AI-driven predictive maintenance reduces downtime by 30%, leveraging sensor data to preempt failures.

Lesser-Known Factor: Cross-modal data fusion enhances supply chain optimization, integrating visual and transactional data for precise forecasting.

Actionable Framework: Employ multimodal AI models to unify real-time analytics and historical trends, driving efficiency in logistics and inventory management.

Ethical Considerations and Challenges

Privacy Risks: Generative AI often processes sensitive data, raising concerns about unauthorized access. For example, healthcare applications risk exposing patient records, necessitating robust encryption and data governance frameworks.

Bias Amplification: AI models trained on imbalanced datasets perpetuate stereotypes. A study revealed gender bias in job recommendations, highlighting the need for diverse training data and bias mitigation techniques.

Misinformation Threats: AI-generated deepfakes erode public trust. For instance, political deepfakes have been used in attempts to influence elections, underscoring the urgency for detection tools and media literacy programs.

Environmental Impact: Training large models like GPT-3 consumes significant energy, equating to hundreds of tons of CO2 emissions. Transitioning to energy-efficient architectures is critical for sustainable AI development.
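The emissions figure follows from simple arithmetic: energy used (accelerators × power × hours × data-center overhead, or PUE) times the carbon intensity of the grid. Every input below is a hypothetical placeholder, not a measured value for GPT-3 or any real training run.

```python
def training_emissions_tonnes(num_gpus, gpu_power_kw, hours, pue, kg_co2e_per_kwh):
    """Back-of-the-envelope CO2-equivalent emissions of a training run, in tonnes.

    energy (kWh)   = accelerators x average power (kW) x hours x PUE
    emissions (kg) = energy x grid carbon intensity (kg CO2e per kWh)
    """
    energy_kwh = num_gpus * gpu_power_kw * hours * pue
    return energy_kwh * kg_co2e_per_kwh / 1000.0

# Hypothetical run: 1,000 GPUs averaging 0.4 kW for 30 days, PUE 1.1, grid at 0.4 kg CO2e/kWh.
tonnes = training_emissions_tonnes(1000, 0.4, 30 * 24, 1.1, 0.4)
print(round(tonnes, 1))  # 126.7
```

The formula also shows the levers for reducing impact: more efficient hardware lowers power, better data centers lower PUE, and cleaner grids lower carbon intensity.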

Actionable Insight: Establish multidisciplinary oversight committees to ensure ethical AI deployment, balancing innovation with societal values.

Bias Mitigation and Fairness

Dynamic Reweighting: Reweighting the loss function to prioritize underrepresented groups during training can reduce bias. For instance, healthcare AI improved diagnostic accuracy for minorities by 20% using this approach.
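A static version of loss reweighting can be sketched in a few lines: each example is weighted inversely to the frequency of its group, so small groups are not drowned out. The groups and losses are hypothetical, and dynamic variants would recompute the weights during training (for example, from per-group losses).

```python
from collections import Counter

def group_weights(groups):
    """Weight each example inversely to how often its group appears.

    Weights average to 1 over the dataset, and every group contributes the
    same total weight to the loss regardless of its size.
    """
    counts = Counter(groups)
    n, k = len(groups), len(counts)
    return [n / (k * counts[g]) for g in groups]

def weighted_mean_loss(losses, weights):
    return sum(loss * w for loss, w in zip(losses, weights)) / sum(weights)

# Hypothetical data: group B is under-represented and the model fits it poorly.
groups = ["A"] * 8 + ["B"] * 2
losses = [0.2] * 8 + [1.0] * 2
weights = group_weights(groups)
print(weights[0], weights[-1])                        # 0.625 2.5
print(round(sum(losses) / len(losses), 3))            # unweighted: 0.36
print(round(weighted_mean_loss(losses, weights), 3))  # reweighted: 0.6
```

The reweighted loss is dominated more by the poorly served group, so gradient updates focus on improving its performance.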

Cross-Disciplinary Insights: Borrowing from sociology, integrating fairness constraints ensures AI respects societal norms. This fosters equitable outcomes in applications like loan approvals and hiring systems.

Actionable Framework: Combine diverse datasets, regular audits, and fairness-promoting algorithms to create transparent, inclusive AI systems.

Privacy and Data Security

Federated Learning: By training models locally and sharing only model updates rather than raw data, federated learning minimizes exposure risks. For example, healthcare systems use it to analyze patient data across sites, supporting GDPR compliance while maintaining predictive accuracy.
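The idea can be sketched with federated averaging (FedAvg): each site trains on its own records and sends back only model weights, which the server averages in proportion to local data size. The one-parameter model and the two sites are hypothetical. On its own this does not guarantee privacy, since model updates themselves can leak information.

```python
def local_update(w, data, lr=0.1, epochs=5):
    """Hypothetical client step: fit y = w * x by gradient descent on local data only."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w

def federated_average(client_ws, client_sizes):
    """FedAvg aggregation: average client models weighted by local dataset size."""
    total = sum(client_sizes)
    return sum(w * n for w, n in zip(client_ws, client_sizes)) / total

# Two hypothetical sites, each holding private (x, y) records; the true slope is 3.
clients = [
    [(1.0, 3.0), (2.0, 6.0)],
    [(0.5, 1.5), (1.5, 4.5), (3.0, 9.0)],
]
global_w = 0.0
for _ in range(20):
    # Only the updated weights leave each site; the raw records never do.
    client_ws = [local_update(global_w, data) for data in clients]
    global_w = federated_average(client_ws, [len(d) for d in clients])
print(round(global_w, 3))
```

The server only ever sees `client_ws`, yet the shared model converges to the slope present in the combined data.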

Lesser-Known Risks: Model inversion attacks exploit AI outputs to reconstruct sensitive inputs. Addressing this calls for differential privacy techniques, which add calibrated noise to data, query results, or training updates, balancing utility and privacy.
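A minimal sketch of differential privacy applied to a query: the Laplace mechanism adds noise whose scale is the query’s sensitivity divided by the privacy budget epsilon. The records and epsilon values are hypothetical; training-time methods such as DP-SGD apply the same principle to clipped gradients.

```python
import random

def laplace_noise(scale, rng):
    """Laplace(0, scale) noise as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_count(records, predicate, epsilon, rng=random):
    """Epsilon-differentially-private count via the Laplace mechanism.

    Adding or removing one record changes a count by at most 1 (its
    sensitivity), so noise with scale 1 / epsilon suffices. Smaller epsilon
    means more noise and stronger privacy.
    """
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)

# Hypothetical patient records: (age, has_condition)
records = [(34, True), (51, False), (29, True), (62, True), (45, False)]
rng = random.Random(42)
print(private_count(records, lambda r: r[1], epsilon=0.5, rng=rng))
```

Any single released value is noisy, which hides whether one particular patient is in the data, while averages over many queries or large populations remain useful.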

Actionable Framework: Combine encryption protocols, federated architectures, and regular vulnerability assessments to safeguard sensitive data while fostering trust in AI systems.

Addressing Misuse and Misinformation

AI-Powered Detection Systems: Natural language processing (NLP) models identify misinformation patterns in text, while computer vision and audio models help detect manipulated media. For instance, social media platforms deploy these systems to flag deepfakes, reducing harmful content dissemination by 30%.

Lesser-Known Challenges: Satirical content often evades detection, complicating automated systems. Integrating contextual analysis and human oversight bridges this gap, ensuring nuanced understanding.

Actionable Framework: Combine real-time AI detection, media literacy programs, and cross-platform collaboration to combat misinformation effectively while preserving freedom of expression.

Future Directions and Emerging Trends

Multimodal Expansion: Generative AI is evolving to integrate text, images, audio, and video, a direction visible in OpenAI’s GPT-4, which accepts both text and image inputs. This shift enables adaptive learning systems and immersive virtual experiences, transforming education and entertainment.

Ethical AI Design: Experts advocate for explainable AI and bias audits, ensuring fairness. For instance, Google’s Gemini incorporates transparency protocols, addressing public trust gaps and fostering responsible innovation.

AI-Driven Emotional Intelligence: Emerging systems with empathy modeling enhance mental health support and customer service, bridging human-machine interaction gaps. This trend redefines AI’s role in personalized care and emotional well-being.

Open Research Questions in Generative AI

Contextual Understanding in Multimodal Systems: How can AI models achieve deeper semantic alignment across modalities? Current approaches, like contrastive learning, excel in pairing text and images but struggle with nuanced contextual relationships.

Real-World Implications: In healthcare, multimodal AI could revolutionize diagnostic tools by integrating medical imaging with patient histories. However, ensuring interpretability and accuracy remains a critical challenge.

Interdisciplinary Connections: Insights from cognitive neuroscience—such as how humans process multisensory information—could inspire architectural innovations in AI, fostering adaptive learning and context-aware systems.

Actionable Frameworks: Researchers should prioritize dynamic fine-tuning techniques and domain-specific datasets to enhance contextual coherence, ensuring AI systems are both scalable and reliable in high-stakes applications.

Potential Societal Impact and Policy Implications

Economic Inequality: Generative AI risks widening socio-economic gaps by disproportionately benefiting tech-savvy industries. Policies promoting equitable access and upskilling programs can mitigate exclusion, fostering inclusive innovation across underserved communities.

Real-World Applications: In education, AI-driven tools can democratize personalized learning, but without affordable access, disparities may deepen. Governments must incentivize low-cost AI solutions to ensure broad societal benefits.

Interdisciplinary Insights: Behavioral economics highlights how access barriers exacerbate inequality. Integrating these insights into policy frameworks can guide sustainable AI adoption while addressing systemic inequities.

Actionable Frameworks: Policymakers should enforce data-sharing mandates and public-private partnerships to ensure AI accessibility, while embedding ethical guidelines to balance innovation with social responsibility.

FAQ

What are the key milestones in the evolution of generative AI from GPT to modern LLMs?

The key milestones in the evolution of generative AI from GPT to modern LLMs include several transformative developments:

- Introduction of GPT (2018): OpenAI’s Generative Pre-trained Transformer (GPT) marked a significant leap in natural language processing by showcasing the potential of transformer-based architectures for generating human-like text.

- Advancements with GPT-2 (2019): GPT-2 demonstrated scalable text generation capabilities, highlighting the importance of larger datasets and model sizes in improving contextual understanding.

- Breakthrough with GPT-3 (2020): With 175 billion parameters, GPT-3 set a new benchmark for language models, excelling in tasks like summarization, translation, and creative writing, and demonstrating strong few-shot (in-context) learning that built on GPT-2’s zero-shot results.

- Transition to GPT-4 (2023): GPT-4 introduced multimodal capabilities, accepting both text and image inputs, and improved reasoning, making it a versatile tool for applications in education, healthcare, and customer service.

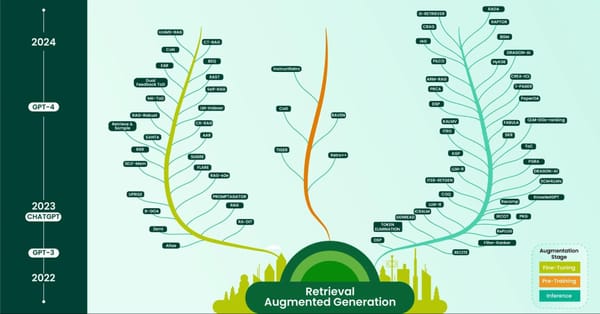

- Emergence of Modern LLMs (2024 and beyond): Modern large language models expanded on GPT-4’s foundation by refining efficient attention mechanisms, reinforcement learning from human feedback (RLHF, already used for ChatGPT and GPT-4), and cross-modal alignment, enabling more efficient, ethical, and contextually aware AI systems.

How do modern LLMs differ from earlier generative AI models in terms of architecture and capabilities?

Modern LLMs differ from earlier generative AI models in several critical ways, both in architecture and capabilities:

- Enhanced Contextual Understanding: Modern LLMs leverage advanced transformer architectures with improved self-attention mechanisms, enabling them to process and retain context over longer passages, unlike earlier models that struggled with long-range dependencies.

- Multimodal Integration: Unlike earlier language models that focused solely on text, modern LLMs incorporate multimodal capabilities, allowing them to process inputs and generate outputs across text, images, and even code, broadening their application scope.

- Sparse Attention Mechanisms: Many modern LLMs use sparse or otherwise efficient attention techniques to optimize computational efficiency, making them more scalable and resource-efficient compared to earlier dense attention models.

- Reinforcement Learning from Human Feedback (RLHF): By incorporating RLHF, modern LLMs achieve higher alignment with human preferences, improving their ability to generate contextually relevant and ethically sound outputs.

- Few-Shot and Zero-Shot Learning: Modern LLMs exhibit superior adaptability, excelling in tasks with minimal or no task-specific training, a capability that was limited or absent in earlier generative AI models.

What are the primary applications of generative AI and LLMs across various industries?

The primary applications of generative AI and LLMs across various industries are diverse and transformative:

- Healthcare: Generative AI enhances medical research by predicting protein structures and proposing molecular designs for drug discovery. It also streamlines administrative tasks like appointment scheduling, billing, and summarizing patient data from Electronic Health Records (EHRs), improving efficiency and reducing errors.

- Marketing and Advertising: LLMs enable the creation of personalized marketing content, generate on-brand text and images for campaigns, and optimize search engine strategies through automated content generation and tagging.

- E-commerce: Generative AI powers recommendation engines, creates detailed product descriptions, and personalizes shopping experiences, making online platforms more engaging and user-friendly.

- Entertainment and Media: In entertainment, LLMs assist in scriptwriting, generate adaptive gaming content, and create realistic audio and video outputs, revolutionizing creative processes.

- Financial Services: Generative AI supports investment strategy development, automates regulatory compliance tasks, and enhances customer service through hyper-personalized interactions and efficient document summarization.

- Education: AI-driven tools provide personalized learning experiences, automate grading, and offer real-time feedback, enabling educators to focus on mentorship and support.

- Manufacturing: Generative AI optimizes production processes, predicts maintenance needs using IoT data, and enhances decision-making on factory floors, leading to cost savings and increased productivity.

These applications highlight the versatility and impact of generative AI and LLMs in driving innovation and efficiency across industries.

What ethical challenges arise with the advancement of generative AI and how can they be addressed?

The advancement of generative AI brings forth several ethical challenges, along with potential strategies to address them:

- Bias and Fairness: Generative AI models often inherit biases from their training data, leading to unfair or discriminatory outputs. Addressing this requires dynamic reweighting strategies during training and the implementation of fairness-focused algorithms to ensure equitable outcomes.

- Privacy and Data Security: The extensive data collection required for training generative AI raises concerns about privacy breaches and unauthorized use of personal information. Federated learning and robust data governance policies can mitigate these risks by enabling AI development without compromising individual privacy.

- Misinformation and Deepfakes: The ability of generative AI to create highly realistic but false content, such as deepfakes, poses risks to trust and societal stability. Detection systems and verification mechanisms must be developed to identify and counteract such content effectively.

- Environmental Impact: The high energy consumption of training and deploying large models contributes to environmental concerns. Transitioning to energy-efficient computing technologies and adopting sustainable practices in AI research can help reduce this impact.

- Accountability and Transparency: The opacity of generative AI systems makes it challenging to assign accountability for their outputs. Ensuring observability, inspectability, and modifiability in AI systems can enhance transparency and foster trust.

- Job Displacement and Economic Inequality: Automation driven by generative AI may lead to job losses and exacerbate wealth disparities. Policymakers and organizations must prioritize upskilling initiatives and equitable access to AI technologies to mitigate these effects.
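Reweighting, one of the bias mitigations listed above, can be sketched in a few lines. This is a minimal, static version under our own assumptions: each training example carries a hypothetical group label, and its weight is set inversely proportional to that group's frequency so every group contributes equally to the loss. A dynamic strategy would recompute such weights during training (for example, from per-group losses); that refinement is not shown here.

```python
from collections import Counter
from typing import Hashable, List


def inverse_frequency_weights(groups: List[Hashable]) -> List[float]:
    """One weight per example so that each group carries equal total weight.

    weight(g) = N / (K * count(g)), where N is the number of examples and
    K is the number of distinct groups. The weights sum to N.
    """
    counts = Counter(groups)
    n, k = len(groups), len(counts)
    return [n / (k * counts[g]) for g in groups]


def weighted_mean_loss(losses: List[float], weights: List[float]) -> float:
    """Weighted average of per-example losses."""
    return sum(l * w for l, w in zip(losses, weights)) / sum(weights)


# Hypothetical toy data: group "a" outnumbers group "b" three to one.
groups = ["a", "a", "a", "b"]
losses = [0.2, 0.2, 0.2, 0.8]  # hypothetical per-example losses

weights = inverse_frequency_weights(groups)  # approximately [0.667, 0.667, 0.667, 2.0]
print(weights)
print(weighted_mean_loss(losses, weights))  # equals the mean of the two group means
```

Without reweighting, the plain mean loss here is 0.35, dominated by group "a"; with the weights, each group counts equally and the result is 0.5, so errors on the minority group are no longer masked.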

By proactively addressing these challenges through interdisciplinary collaboration, ethical frameworks, and regulatory measures, generative AI can be developed and deployed in ways that align with societal values and promote human progress.

What future trends and innovations can we expect in the development of generative AI and LLMs?

Future trends and innovations in the development of generative AI and LLMs are poised to redefine their capabilities and applications:

- Enhanced Multimodal Models: The integration of text, images, audio, and video into unified models will expand the scope of generative AI, enabling seamless cross-modal applications such as advanced virtual assistants and immersive virtual reality experiences.

- Self-Fact-Checking Mechanisms: Emerging LLMs are expected to incorporate fact-checking capabilities, verifying outputs against external sources and providing citations, which should improve the reliability and trustworthiness of AI-generated content.

- Energy-Efficient Architectures: Innovations in sparse attention mechanisms and parameter-efficient training methods will reduce the computational demands of large models, making them more sustainable and accessible to smaller enterprises.

- Real-Time Adaptability: Future LLMs will exhibit improved contextual memory and real-time adaptability, enabling them to handle dynamic, long-term interactions in applications like customer support and personalized education.

- Domain-Specific Customization: Fine-tuning and transfer learning strategies will evolve, allowing for the creation of highly specialized models tailored to industries such as healthcare, legal, and finance, enhancing their precision and utility.

- Ethical and Transparent AI: Advances in explainability and accountability mechanisms should help generative AI systems operate transparently, fostering trust and compliance with ethical standards.

- Collaborative AI Systems: Generative AI will increasingly function as collaborative tools, assisting humans in creative and decision-making processes, from software development to scientific research.
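The efficiency gains from parameter-efficient training and sparse attention can be estimated with simple arithmetic. The sketch below assumes a LoRA-style low-rank adapter (one common parameter-efficient method, chosen here for illustration): a frozen d_out x d_in weight matrix W plus two small trainable factors B (d_out x r) and A (r x d_in). It also counts the query-key pairs scored by full attention versus a sliding-window pattern, one simple form of sparse attention. The hidden size, sequence length, and window are hypothetical values.

```python
def full_finetune_params(d_out: int, d_in: int) -> int:
    """Trainable parameters when every entry of a d_out x d_in matrix is updated."""
    return d_out * d_in


def lora_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters for a low-rank update B @ A added to a frozen W.

    B has shape (d_out, rank) and A has shape (rank, d_in).
    """
    return rank * (d_out + d_in)


def full_attention_pairs(seq_len: int) -> int:
    """Query-key pairs scored when every token attends to every token."""
    return seq_len * seq_len


def sliding_window_pairs(seq_len: int, window: int) -> int:
    """Query-key pairs scored when each token attends only to tokens
    at most `window` positions away, clipped at the sequence edges."""
    total = 0
    for i in range(seq_len):
        lo = max(0, i - window)
        hi = min(seq_len - 1, i + window)
        total += hi - lo + 1
    return total


d = 4096  # hypothetical hidden size
full = full_finetune_params(d, d)
for r in (4, 8, 16):
    low = lora_params(d, d, r)
    print(f"rank={r}: {low:,} trainable vs {full:,} ({100 * low / full:.2f}%)")

n, w = 8192, 256  # hypothetical sequence length and attention window
print(f"attention pairs: {sliding_window_pairs(n, w):,} vs {full_attention_pairs(n):,}")
```

At rank 8, the adapter trains 65,536 parameters per 4096 x 4096 matrix instead of 16,777,216, roughly 0.39%. The sliding-window count grows linearly with sequence length rather than quadratically, which is the basic reason sparse patterns scale to longer inputs.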

These trends and innovations highlight the transformative potential of generative AI and LLMs, promising to revolutionize industries while addressing existing limitations and challenges.

Conclusion

The journey from GPT to modern LLMs represents a paradigm shift in how machines understand and generate human-like content, with profound implications across industries. These advancements are not merely incremental but transformative, akin to moving from a typewriter to a fully automated publishing house. For instance, multimodal models like GPT-4, which accept both text and image inputs, have broadened accessibility and opened up applications such as assistance with medical image interpretation and real-time language translation.

However, misconceptions persist. A common belief is that larger models inherently guarantee better performance. While scale matters, innovations like sparse attention mechanisms and parameter-efficient fine-tuning demonstrate that efficiency can rival brute computational power. This shift parallels the evolution of transportation, where electric vehicles prioritize sustainability over sheer engine size.

Expert perspectives further illuminate the ethical landscape. Economists like Daron Acemoglu warn of generative AI’s potential to exacerbate economic inequality, while technologists advocate for inclusive design and upskilling initiatives to counteract job displacement. These insights underscore the dual-edged nature of AI’s societal impact.

Generative AI has also fostered creativity in unexpected domains. For example, techniques like DreamBooth, which personalize text-to-image models from a handful of reference images, let artists co-create with AI, blending human intuition with machine precision. This collaboration challenges the narrative of AI as a replacement, positioning it instead as an enabler of human ingenuity.

As generative AI continues to evolve, its trajectory will hinge on balancing innovation with responsibility. By addressing ethical challenges, embracing sustainable practices, and fostering interdisciplinary collaboration, society can harness its transformative potential while mitigating risks. The future of generative AI is not just about technological progress but about shaping a world where machines and humans thrive together.

Synthesizing Insights and Future Outlook

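A pivotal insight lies in reinforcement learning from human feedback (RLHF), which refines generative AI outputs for nuanced tasks. In RLHF, human annotators compare alternative model outputs, a reward model is trained to predict their preferences, and the generative model is then fine-tuned to maximize that learned reward. This approach is valuable in applications like legal document drafting, where precision is paramount.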
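The reward-model step of RLHF can be sketched as a pairwise loss. Assuming annotators have marked one response as preferred over another, and a reward model has assigned each response a scalar score (the scores below are hypothetical), the loss -log sigmoid(r_chosen - r_rejected) is small when the preferred response scores higher and large when it scores lower. This covers only reward-model training; the subsequent policy-optimization step (PPO in InstructGPT, for example) is not shown.

```python
import math
from typing import List, Tuple


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def pairwise_preference_loss(r_chosen: float, r_rejected: float) -> float:
    """-log(sigmoid(r_chosen - r_rejected)), computed as softplus(r_rejected - r_chosen)."""
    return softplus(r_rejected - r_chosen)


def mean_preference_loss(pairs: List[Tuple[float, float]]) -> float:
    """Average loss over (chosen_score, rejected_score) pairs."""
    return sum(pairwise_preference_loss(c, r) for c, r in pairs) / len(pairs)


# Hypothetical reward-model scores for three human-labeled comparisons:
# (score of the preferred response, score of the rejected response).
pairs = [(2.0, 0.5), (0.1, 0.3), (1.2, 1.2)]
print(round(mean_preference_loss(pairs), 4))
```

Training the reward model means adjusting its parameters to lower this average, which pushes preferred responses toward higher scores. The second pair, where the rejected response currently scores higher, contributes the most loss.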

Real-world implications extend to adaptive learning systems. For instance, AI-driven platforms now tailor educational content dynamically, addressing individual learning gaps. This innovation bridges disciplines, merging cognitive science with machine learning to enhance outcomes.

Conventional wisdom often overemphasizes data volume, yet data quality frequently matters more. Techniques like active learning select the most informative unlabeled examples for human labeling, reducing labeling and training costs while improving model accuracy, a strategy underutilized in many industries.
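Uncertainty sampling, one common active-learning strategy, can be sketched as follows. Assuming a model has already produced class probabilities for each unlabeled example (the pool and its probabilities below are hypothetical), the sketch ranks examples by predictive entropy and returns the k most uncertain ones for annotators to label next.

```python
import math
from typing import Dict, List


def entropy(probs: List[float]) -> float:
    """Shannon entropy (in nats) of a predicted class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_most_uncertain(pool: Dict[str, List[float]], k: int) -> List[str]:
    """Return the ids of the k examples whose predictions have the highest entropy."""
    ranked = sorted(pool, key=lambda ex_id: entropy(pool[ex_id]), reverse=True)
    return ranked[:k]


# Hypothetical model predictions over three classes for an unlabeled pool.
pool = {
    "doc1": [0.98, 0.01, 0.01],  # confident: little to learn from a label
    "doc2": [0.34, 0.33, 0.33],  # nearly uniform: most uncertain
    "doc3": [0.60, 0.30, 0.10],
    "doc4": [0.50, 0.49, 0.01],
}
print(select_most_uncertain(pool, k=2))  # → ['doc2', 'doc3']
```

In a full loop, the selected examples would be labeled, added to the training set, and the model retrained before the next round of selection; entropy is only one possible uncertainty score.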

Looking ahead, fostering cross-disciplinary collaboration will be essential. By uniting AI with fields like behavioral economics or ethics, we can design systems that not only perform but align with societal values, ensuring sustainable progress.