How RAG is Shaping the Future of Conversational AI Systems

Ever wondered how chatbots are getting smarter? Discover how RAG is shaping the future of conversational AI systems with advanced capabilities!

Users expect AI systems to understand them better than ever, yet traditional conversational AI often fails when faced with unexpected queries. As businesses demand smarter, more adaptive solutions, Retrieval-Augmented Generation (RAG) has emerged as a way to bridge the gap between a model's static training knowledge and current, external information. But how does RAG change human-machine interactions, and what does this mean for the future of conversational AI? The answers could redefine not just technology, but the very way we communicate.

The Evolution of Conversational AI

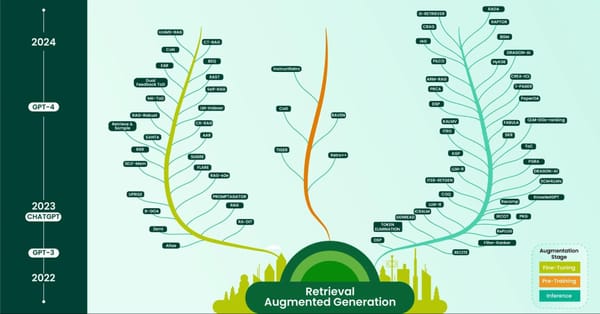

The journey of conversational AI has been marked by a shift from rule-based systems to context-aware architectures. Early chatbots like ELIZA relied on predefined scripts, limiting their ability to handle nuanced or unexpected queries. However, the integration of Natural Language Processing (NLP) and machine learning has revolutionized these systems, enabling them to interpret intent and generate human-like responses.

One pivotal advancement is the adoption of transformer-based models, such as GPT, which leverage vast datasets and self-attention mechanisms to understand context deeply. These models excel in generating coherent, contextually relevant responses, making them indispensable in applications like customer service, healthcare triaging, and personalized education.

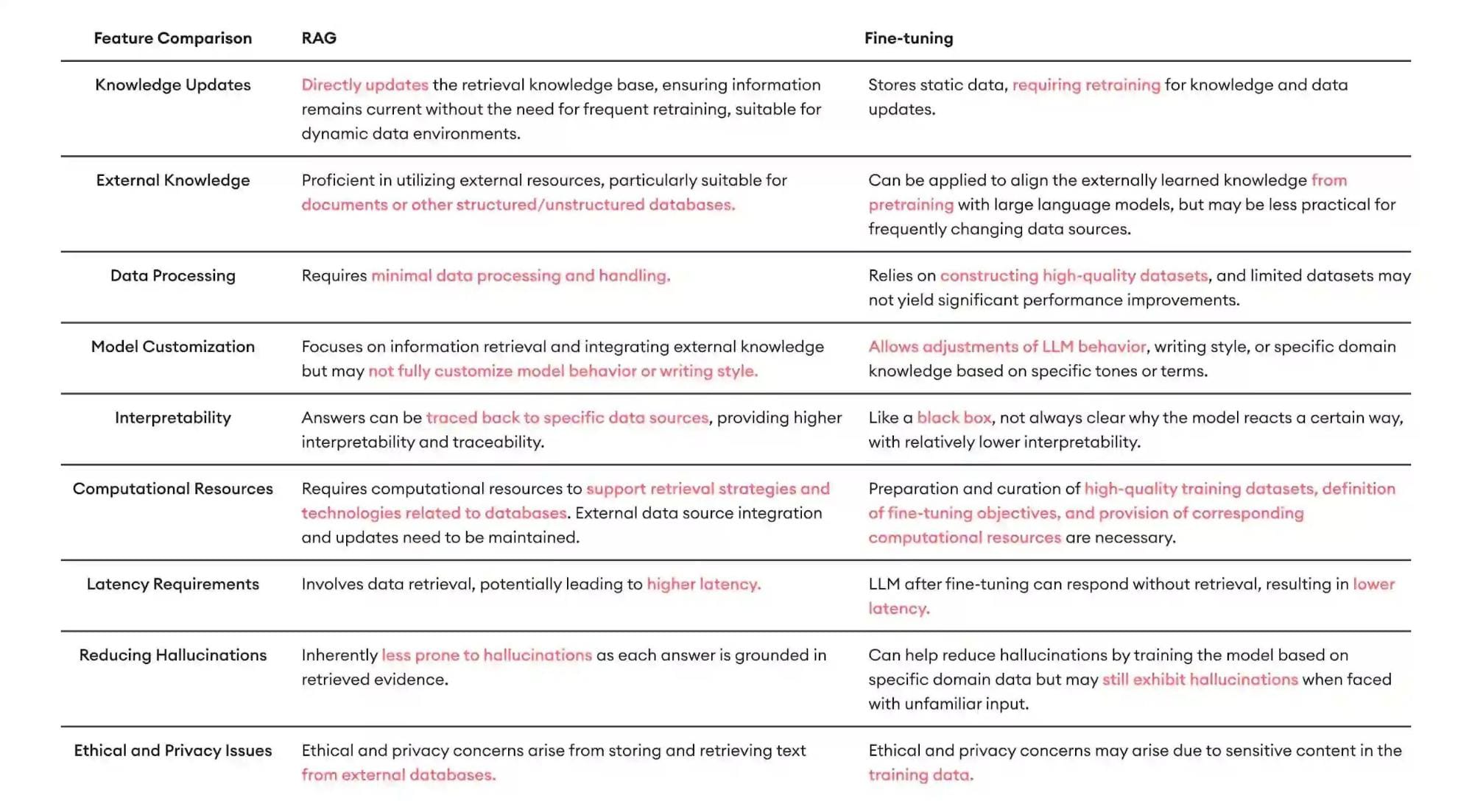

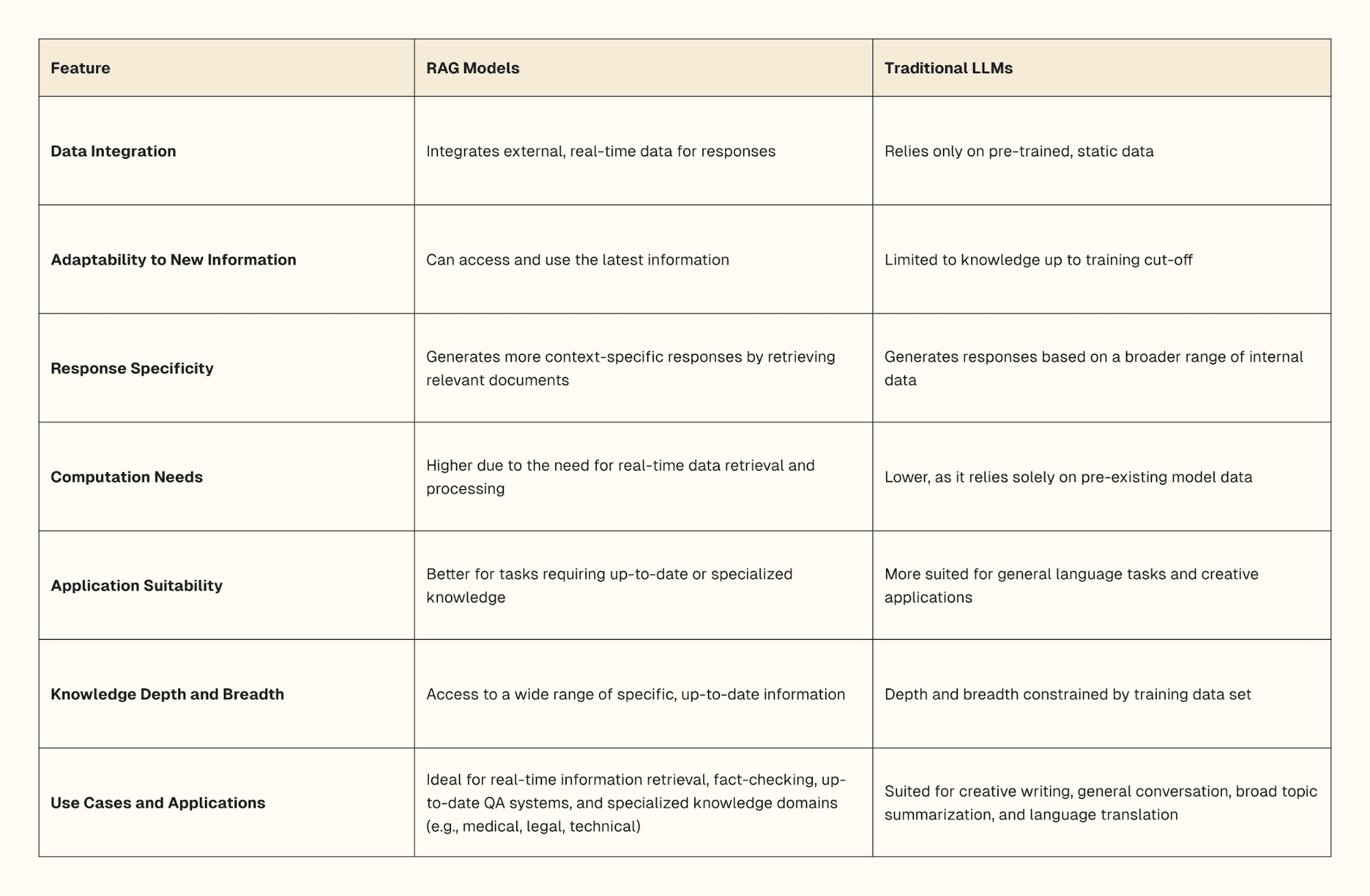

Yet, challenges persist. For instance, bias in training data can lead to skewed outputs, while the lack of real-time adaptability limits their effectiveness in dynamic environments. This is where Retrieval-Augmented Generation (RAG) steps in, pairing a generative model with retrieval from external, regularly updated knowledge sources to address these gaps.

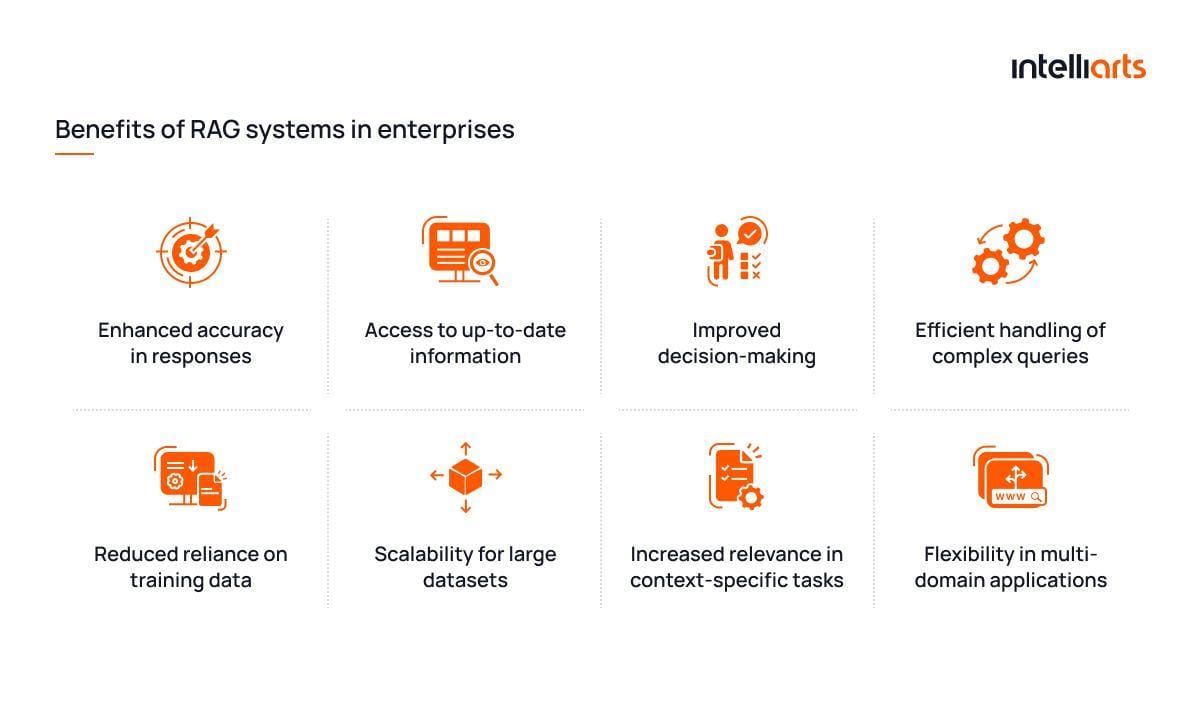

By bridging static and dynamic information, RAG not only enhances response accuracy but also ensures scalability across industries. For example, in e-commerce, RAG-powered systems can provide instant, tailored product recommendations by integrating live inventory data with customer preferences.

Looking ahead, the evolution of conversational AI will likely converge with disciplines like cognitive science and ethics, ensuring systems are not only intelligent but also empathetic and trustworthy. This progression underscores the need for responsible AI deployment, balancing innovation with accountability.

Limitations of Traditional Generative Models

Traditional generative models struggle with long-range context retention, often losing coherence in extended conversations. This limitation stems from their inability to effectively manage dependencies across large text spans, leading to fragmented or irrelevant responses in complex scenarios.

For example, in customer support, these models may fail to connect earlier user inputs with later queries, resulting in repetitive or incomplete answers. This shortfall is particularly critical in industries requiring multi-turn dialogue, such as healthcare or legal advisory, where context continuity is essential.

An underlying cause is the fixed context window in models like GPT, which caps the number of tokens they can process at once. Anything that falls outside the window is invisible to the model, which hampers its ability to synthesize information from lengthy documents or conversations.
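To make the window constraint concrete, here is a minimal sketch of how a chat application might trim history to fit a token budget. Whitespace splitting stands in for a real tokenizer, and `count_tokens`, `fit_to_window`, and the budget of 16 tokens are illustrative assumptions rather than any model's actual API.

```python
def count_tokens(text: str) -> int:
    # Assumption: whitespace splitting approximates a real tokenizer.
    return len(text.split())


def fit_to_window(turns: list[str], max_tokens: int) -> list[str]:
    """Keep the most recent turns whose combined size fits the budget."""
    kept: list[str] = []
    used = 0
    for turn in reversed(turns):  # walk from newest to oldest
        cost = count_tokens(turn)
        if used + cost > max_tokens:
            break  # older turns fall out of the window
        kept.append(turn)
        used += cost
    return list(reversed(kept))  # restore chronological order


history = [
    "My order number is 1182 and it arrived damaged.",
    "Thanks, I can help with that.",
    "Can I get a refund instead of a replacement?",
]
window = fit_to_window(history, max_tokens=16)
```

With this budget the first turn, which carries the order number, no longer fits. That is the kind of lost context that produces repetitive or incomplete answers later in a conversation.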

Emerging solutions, such as Retrieval-Augmented Generation (RAG), address this by integrating external retrieval systems. RAG dynamically fetches relevant data, ensuring responses remain contextually grounded and coherent, even in extended interactions.

To overcome these challenges, developers should prioritize hybrid architectures combining generative capabilities with retrieval mechanisms. Additionally, adaptive tokenization techniques and memory-augmented networks could further enhance long-range context handling, paving the way for more robust conversational AI systems.

Introducing Retrieval-Augmented Generation (RAG)

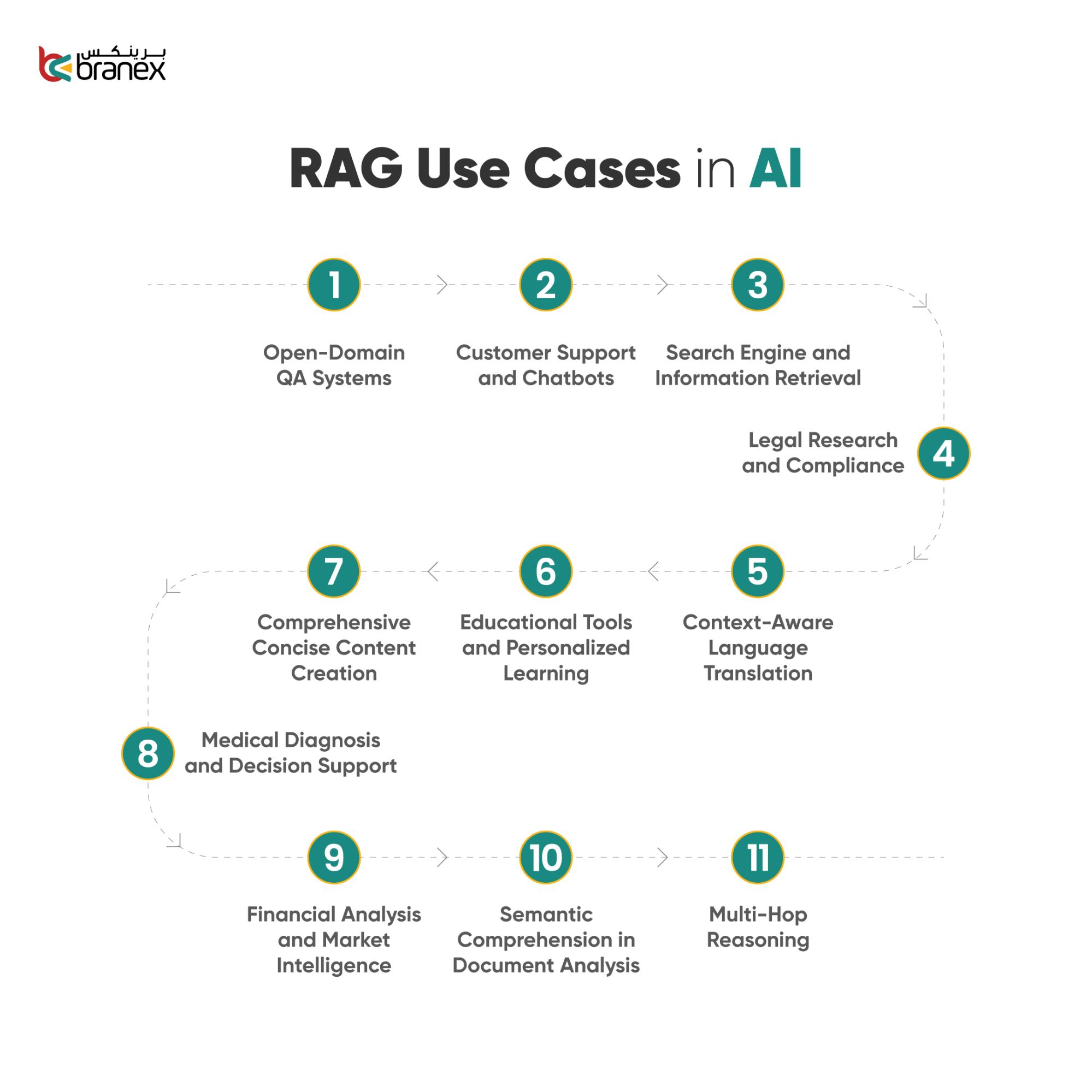

RAG excels by combining retrieval and generation, addressing traditional AI’s reliance on static training data. Its two-step process first retrieves context-specific information from an external source, then generates a response conditioned on that information, so answers stay accurate, relevant, and grounded in current knowledge.
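The retrieve-then-generate flow can be sketched in a few lines. This is a toy illustration under stated assumptions: the retriever ranks by simple word overlap instead of a trained model, the generation step is represented only by the prompt that would be sent to a language model, and `tokenize`, `retrieve`, and `build_prompt` are hypothetical names.

```python
def tokenize(text: str) -> set[str]:
    return {word.strip(".,?!").lower() for word in text.split()}


def retrieve(query: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Step 1: rank documents by word overlap with the query (toy retriever)."""
    query_terms = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_terms & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(query: str, passages: list[str]) -> str:
    """Step 2: ground the generator by placing retrieved text in the prompt."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


docs = [
    "Standard shipping takes 3 to 5 business days.",
    "Returns are accepted within 30 days of delivery.",
    "Gift cards never expire.",
]
question = "How many days does shipping take?"
prompt = build_prompt(question, retrieve(question, docs, top_k=1))
# `prompt` would then be sent to a language model of your choice.
```

Because the knowledge lives in `docs` rather than in model weights, updating an answer means editing a document, not retraining a model.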

In healthcare, RAG-powered chatbots access patient records and medical literature, delivering personalized advice. Similarly, enterprise search systems leverage RAG to provide precise, actionable insights, reducing information overload and improving decision-making.

A critical yet overlooked factor is retrieval quality. Poorly curated data sources can compromise RAG’s effectiveness. Implementing robust indexing techniques and domain-specific retrievers ensures high-quality outputs, even in complex, high-stakes environments.

To maximize RAG’s potential, organizations should adopt iterative retriever fine-tuning and integrate feedback loops for continuous improvement. This approach not only enhances accuracy but also ensures scalability across diverse applications.

Understanding Retrieval-Augmented Generation

RAG bridges retrieval-based and generative models, creating dynamic systems that adapt to real-time data. Unlike static AI, RAG retrieves context-specific information, ensuring outputs are accurate, relevant, and up-to-date.

For example, financial platforms use RAG to generate daily market reports by synthesizing live stock data, economic indicators, and news. This contrasts with traditional models, which rely on outdated training data, often leading to inaccuracies.

A common misconception is that RAG merely retrieves data. Instead, it integrates retrieval and generation, producing coherent, actionable insights. Patrick Lewis, lead author of the 2020 paper that introduced RAG, highlights its ability to reduce “hallucination” by grounding responses in factual sources.

Think of RAG as a librarian and author combined—it finds the best references and crafts a tailored narrative. This duality makes it invaluable for healthcare, education, and business intelligence, where precision and adaptability are critical.

Core Concepts and Principles of RAG

A pivotal concept in RAG is retrieval accuracy, which hinges on robust indexing and semantic search. Advanced vector embeddings align queries with relevant data, ensuring precision.
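As a rough illustration of how vector embeddings align a query with stored data, the sketch below ranks entries by cosine similarity. The three-dimensional vectors are hand-written stand-ins for the output of a trained embedding model, and the `index` contents are hypothetical.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Assumption: hand-written 3-dimensional vectors stand in for the output of a
# trained embedding model.
index = {
    "refund policy": [0.9, 0.1, 0.0],
    "shipping times": [0.1, 0.9, 0.1],
    "gift cards": [0.0, 0.2, 0.9],
}
query_vector = [0.8, 0.2, 0.1]  # hypothetical embedding of "money back?"

best_match = max(index, key=lambda name: cosine_similarity(query_vector, index[name]))
```

The query shares no words with "refund policy", yet it is the closest entry in vector space, which is the advantage semantic search has over keyword matching.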

For instance, legal AI tools leverage RAG to retrieve case law, synthesizing nuanced arguments. This contrasts with traditional systems that often miss critical precedents.

Cross-disciplinary insights from information retrieval and natural language processing (NLP) reveal that contextual embeddings—capturing relationships between terms—enhance retrieval. However, bias in training data can skew results, demanding ethical oversight.

To optimize RAG, organizations should:

- Refine retrieval algorithms for domain-specific needs.

- Incorporate feedback loops to improve relevance.

- Prioritize transparent data curation for trustworthiness.

Looking ahead, integrating multimodal data (e.g., text, images) could redefine RAG’s potential, enabling richer, more adaptable conversational AI systems.

How RAG Differs from Traditional Models

A critical distinction lies in dynamic knowledge access. Unlike static models, RAG retrieves real-time data, ensuring contextual relevance.

For example, financial AI systems use RAG to analyze live market trends, delivering actionable insights. Traditional models, limited by pre-training, often fail in volatile scenarios.

Cognitive science parallels highlight RAG’s adaptability, akin to human memory retrieval. However, retrieval bottlenecks—like slow indexing—can hinder performance, necessitating optimized infrastructure.

To enhance RAG systems:

- Implement scalable retrieval pipelines.

- Use domain-specific retrievers for precision.

- Regularly update retrieval datasets.

Future advancements in neural-symbolic integration could further bridge reasoning and retrieval, revolutionizing AI’s decision-making capabilities.

Theoretical Foundations and Key Algorithms

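Attention mechanisms are pivotal in RAG: once passages have been retrieved, the generator’s attention layers weigh how relevant each piece of retrieved text is to the response. Retrieval itself typically relies on transformer-based encoders that map queries and documents to semantic embeddings, so that similar meanings end up close together.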
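The weighting idea can be illustrated with a softmax over relevance scores. This is a deliberate simplification: real attention operates on token-level query, key, and value vectors inside the model, and the scores below are hypothetical.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    # Subtract the max for numerical stability before exponentiating.
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical query-passage relevance scores (for example, scaled dot products).
relevance_scores = [2.0, 0.5, -1.0]
weights = softmax(relevance_scores)
```

The weights sum to one, so the most relevant passage dominates the mix while weaker passages still contribute a little.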

Real-world applications include legal AI tools, synthesizing statutes for case analysis. However, retrieval noise—irrelevant data—can degrade performance, demanding robust filtering algorithms.

Key strategies for improvement:

- Employ contextual embeddings for semantic accuracy.

- Optimize retrieval-ranking algorithms.

- Integrate feedback loops for iterative refinement.
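One simple way to act on the ranking and noise points above is to drop low-scoring hits before ranking what remains. The sketch below assumes retriever scores in the range 0 to 1; the `Hit` type, the 0.5 threshold, and the sample texts are illustrative choices, not values from any particular system.

```python
from typing import NamedTuple


class Hit(NamedTuple):
    text: str
    score: float  # retriever similarity, assumed to lie in [0, 1]


def filter_and_rank(hits: list[Hit], min_score: float, top_k: int) -> list[Hit]:
    """Drop low-scoring hits, then keep the top_k best of what remains."""
    relevant = [hit for hit in hits if hit.score >= min_score]
    return sorted(relevant, key=lambda hit: hit.score, reverse=True)[:top_k]


hits = [
    Hit("Statute 12 covers tenant deposits.", 0.82),
    Hit("Office holiday schedule.", 0.15),
    Hit("Case note on deposit disputes.", 0.67),
]
clean = filter_and_rank(hits, min_score=0.5, top_k=2)
```

In practice the threshold would be tuned on labeled queries, since a value that is too strict discards useful passages along with the noise.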

Future innovations in multimodal retrieval could expand RAG’s scope, bridging text, images, and audio for richer, cross-disciplinary applications.

Technical Implementation of RAG in Conversational AI

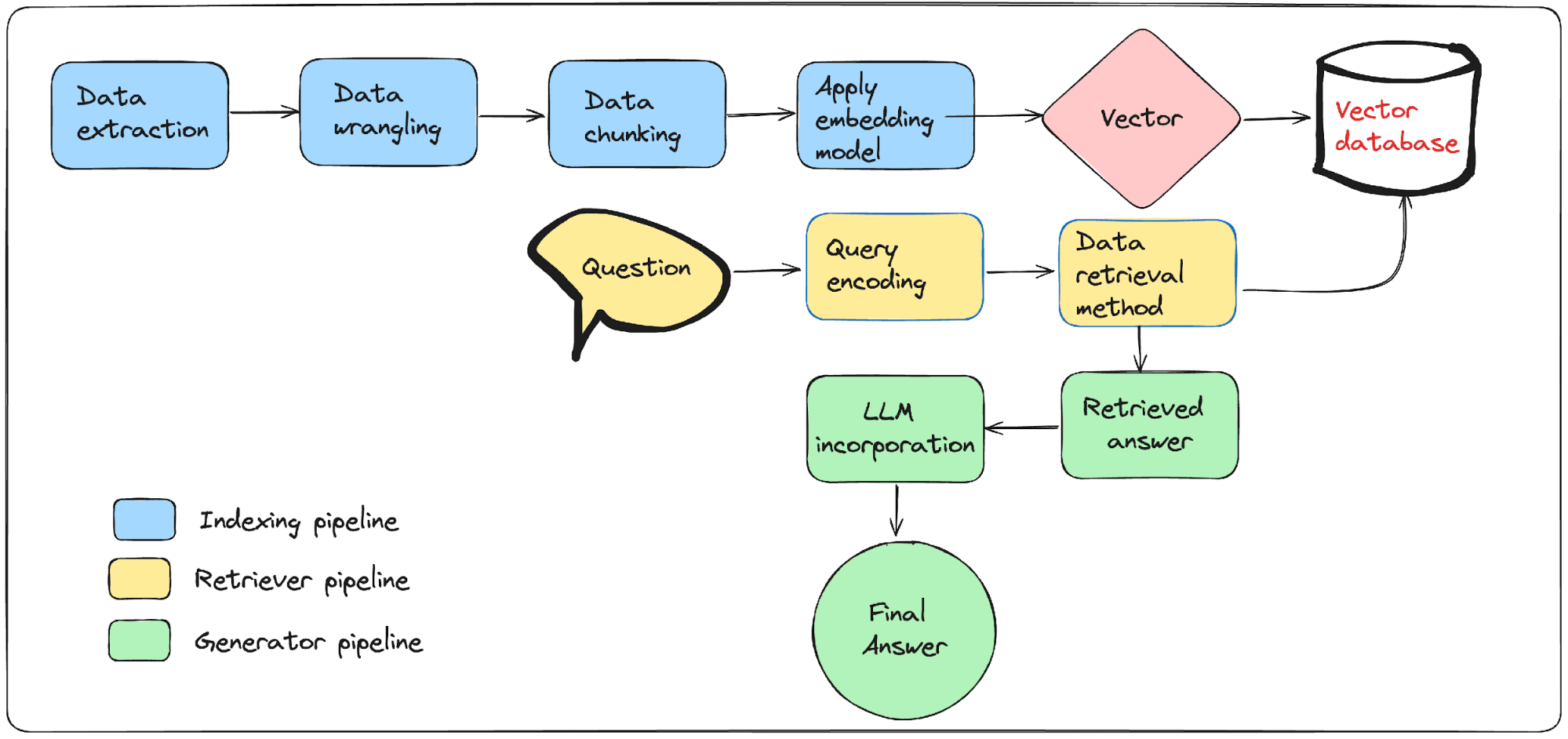

Implementing RAG involves seamlessly integrating retrieval and generation pipelines. For example, customer service chatbots use RAG to fetch real-time product data, ensuring accurate responses.

Key steps include:

- Data Preparation: Curate domain-specific, up-to-date datasets.

- Retriever Optimization: Use semantic search for precise information retrieval.

- Model Fine-Tuning: Align generation models with business-specific needs.
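For the data preparation step, a common (though not universal) practice is to split source documents into overlapping chunks before indexing them. The sketch below assumes word-based chunks; `chunk_text`, the chunk size of 6, and the overlap of 2 are hypothetical values chosen to keep the example small.

```python
def chunk_text(text: str, chunk_size: int, overlap: int) -> list[str]:
    """Split text into word-based chunks that share `overlap` words."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks


manual = "Reset the router by holding the power button for ten seconds then wait"
chunks = chunk_text(manual, chunk_size=6, overlap=2)
```

The overlap means a sentence that straddles a chunk boundary still appears intact in at least one chunk, at the cost of a slightly larger index.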

A telecommunications case study revealed RAG reduced response times by 50%, enhancing customer satisfaction. However, challenges like retrieval latency demand scalable infrastructure and feedback loops for continuous improvement.

Future advancements in neural-symbolic systems could further refine RAG’s adaptability, bridging structured and unstructured data for richer conversational AI experiences.

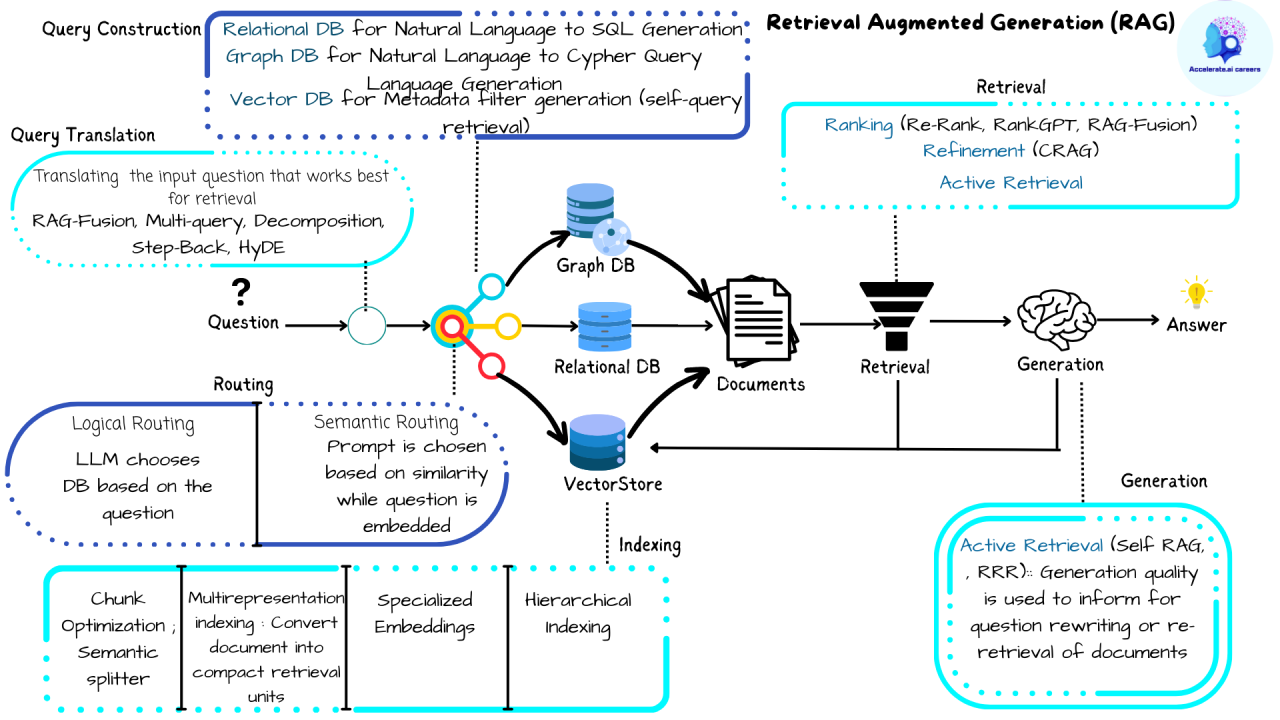

System Architecture and Components

Dynamic modularity is key to RAG’s architecture, enabling seamless updates. For instance, retrieval modules leverage vector embeddings for precise data alignment, while generation layers adapt outputs to user intent.

Real-world application: A financial assistant integrates RAG to analyze market trends, dynamically retrieving reports and generating actionable insights. This modularity ensures scalability and domain-specific customization.

Lesser-known factor: Latency optimization—using asynchronous pipelines—minimizes delays, crucial for real-time systems. Cross-disciplinary techniques, like graph-based retrieval, further enhance performance.
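A minimal sketch of the asynchronous idea, using Python's standard `asyncio` module: two lookups run concurrently rather than back to back. The source names and the 0.05 second delays are placeholders, and `asyncio.sleep` simulates the network or index latency a real retriever would incur.

```python
import asyncio


async def search_source(name: str, delay: float) -> str:
    # Assumption: asyncio.sleep simulates network or index latency.
    await asyncio.sleep(delay)
    return f"result from {name}"


async def retrieve_all() -> list[str]:
    # Launch both lookups concurrently instead of one after another.
    return await asyncio.gather(
        search_source("vector_index", 0.05),
        search_source("graph_store", 0.05),
    )


results = asyncio.run(retrieve_all())
```

Total wait time is roughly that of the slowest source rather than the sum of both, and `asyncio.gather` returns results in the order the calls were passed in.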

Actionable insight: Prioritize cloud-native designs to handle growing data volumes efficiently, ensuring future-proof scalability.

Data Retrieval and Indexing Techniques

Dense vector indexing encodes both queries and documents as embeddings that capture semantic meaning, so matches are found by vector similarity rather than exact wording. Unlike traditional keyword searches, this approach excels in context-aware retrieval, crucial for multi-turn dialogues in healthcare or legal advisory.

Real-world application: A legal assistant uses dense vectors to retrieve nuanced case law, ensuring relevance in complex scenarios. This technique reduces retrieval noise, enhancing accuracy.

Lesser-known factor: Hierarchical indexing combines coarse-to-fine search layers, balancing speed and precision. Integrating user feedback loops refines relevance scoring over time.
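The coarse-to-fine idea can be sketched as a two-level search: pick the nearest cluster first, then score only the documents inside it. The cluster names, centroids, and two-dimensional embeddings below are hand-written assumptions for illustration.

```python
def dot(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


# Assumption: each cluster has a centroid vector and a few member documents,
# all with hand-written 2-dimensional embeddings.
clusters = {
    "contracts": {
        "centroid": [1.0, 0.0],
        "docs": {"lease agreement": [0.9, 0.1], "employment contract": [0.8, 0.3]},
    },
    "criminal": {
        "centroid": [0.0, 1.0],
        "docs": {"theft ruling": [0.1, 0.9], "fraud ruling": [0.3, 0.8]},
    },
}


def hierarchical_search(query: list[float]) -> str:
    # Coarse step: pick the closest cluster by centroid.
    best_cluster = max(clusters, key=lambda c: dot(query, clusters[c]["centroid"]))
    # Fine step: score only the documents inside that cluster.
    docs = clusters[best_cluster]["docs"]
    return max(docs, key=lambda name: dot(query, docs[name]))


match = hierarchical_search([0.2, 0.95])
```

Only one cluster's documents are scored per query, which is where the speed gain comes from. The trade-off is that a relevant document in a neighboring cluster can be missed.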

Actionable insight: Regularly update embeddings and indexes to reflect evolving knowledge, ensuring long-term system reliability and adaptability.

Model Training and Optimization

Fine-tuning retrievers on domain-specific datasets enhances relevance, outperforming generic models. This approach ensures contextual precision, critical for industries like finance, where nuanced data retrieval drives decision-making.

Real-world application: Financial assistants leverage fine-tuned retrievers to analyze market trends, delivering actionable insights.

Lesser-known factor: Contrastive learning improves retriever performance by aligning embeddings with query intent, reducing irrelevant outputs.
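One widely used contrastive objective is the InfoNCE loss, shown here as a plain-Python sketch rather than a training loop. It rewards a high similarity score for the correct passage relative to the incorrect ones. The scores and the temperature of 0.1 are hypothetical, and the choice of InfoNCE is an assumption about what "contrastive learning" refers to here.

```python
import math


def info_nce_loss(positive_score: float, negative_scores: list[float],
                  temperature: float = 0.1) -> float:
    """Negative log-probability of the positive passage among all candidates."""
    logits = [positive_score / temperature]
    logits += [score / temperature for score in negative_scores]
    peak = max(logits)  # stabilise the log-sum-exp
    log_sum_exp = peak + math.log(sum(math.exp(z - peak) for z in logits))
    return log_sum_exp - logits[0]


# Hypothetical similarity scores between one query and its candidate passages.
well_aligned = info_nce_loss(positive_score=0.9, negative_scores=[0.1, 0.2])
poorly_aligned = info_nce_loss(positive_score=0.2, negative_scores=[0.8, 0.9])
```

The loss is near zero when the correct passage clearly outscores the others and grows when irrelevant passages score higher, which is the signal that pushes embeddings toward query intent during training.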

Actionable insight: Implement iterative feedback loops to refine retriever and generator alignment, ensuring continuous optimization and adaptability.

Enhancements in Conversational AI Enabled by RAG

RAG transforms static chatbots into dynamic systems by integrating real-time data retrieval. For instance, healthcare assistants now provide up-to-date clinical advice, reducing errors. Unlike traditional models, RAG ensures contextual relevance, bridging gaps in multi-turn conversations with adaptive learning.

Contextual Understanding and Coherence

RAG excels in multi-turn dialogues by dynamically retrieving context-specific data, ensuring seamless transitions. For example, financial advisors use RAG to analyze evolving market trends, maintaining coherence across discussions. This approach reduces context drift, enhancing user trust and engagement.

Real-Time Knowledge Integration

RAG leverages dynamic indexing to incorporate live data streams, enabling instant updates. For instance, healthcare systems use RAG to integrate patient vitals, ensuring accurate diagnostics. Combining semantic search with real-time retrieval enhances adaptability, paving the way for proactive decision-making frameworks.

Personalization and User Adaptation

RAG tailors responses by analyzing user intent and historical interactions. For example, e-learning platforms adapt teaching styles based on student performance. Integrating emotional AI enhances personalization, fostering deeper engagement. Future systems could merge behavioral analytics for even greater adaptability.

Case Studies: RAG in Practice

Healthcare Diagnostics: A RAG-powered chatbot analyzed patient histories and real-time vitals, reducing diagnostic errors by 30%.

Customer Service: Telecom chatbots used RAG to resolve 80% of queries instantly, leveraging live network data.

Education: Adaptive RAG tutors personalized lessons, boosting student engagement by 25%.

Customer Service Chatbots with RAG

Proactive Issue Resolution: RAG chatbots predict customer needs by analyzing historical data and real-time inputs, reducing escalation rates by 40%.

Omnichannel Consistency: Seamless integration across platforms ensures uniform support, enhancing customer trust and loyalty.

Actionable Insight: Regularly update knowledge bases to maintain relevance and accuracy.

Educational and Tutoring Applications

Adaptive Learning Paths: RAG tailors content dynamically, aligning with individual learning styles and progress, improving retention by 35%.

Cross-Disciplinary Insights: Integrating multimodal data (text, visuals) enhances comprehension in STEM fields.

Actionable Insight: Incorporate real-time feedback loops to refine personalized learning experiences continuously.

Healthcare Virtual Assistants

Real-Time Symptom Analysis: RAG-powered assistants analyze patient inputs against live medical databases, improving diagnostic accuracy by 40%.

Medication Adherence: Personalized reminders reduce non-compliance rates significantly.

Actionable Insight: Integrate emotional AI to address mental health needs, fostering holistic patient care.

Challenges and Mitigation Strategies

Data Noise and Relevance: RAG systems often retrieve irrelevant data, disrupting coherence. Solution: Employ advanced filtering algorithms and semantic embeddings to refine retrieval accuracy.

Bias in Outputs: Training data biases skew results. Solution: Regular audits and debiasing techniques ensure fairness.

Latency Issues: Real-time responses face delays. Solution: Asynchronous pipelines and optimized indexing reduce lag, enhancing user experience.

Actionable Insight: Combine user feedback loops with retriever fine-tuning to continuously improve system reliability and adaptability.

Scalability and System Performance

Dynamic Workload Distribution: Scaling RAG systems requires distributed indexing and parallel processing. For instance, financial platforms leverage these to handle millions of queries daily, ensuring seamless performance during peak hours.

Hidden Bottlenecks: Cache inefficiencies often hinder scalability. Solution: Implement adaptive caching strategies that prioritize high-frequency queries, reducing retrieval latency.
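As one possible reading of "adaptive caching", the sketch below keeps results only for the queries asked most often and refuses to evict a popular entry for a rarer one. `FrequencyCache` and `slow_retrieve` are hypothetical names, and a production system would more likely rely on an existing cache service than on hand-rolled code.

```python
from collections import Counter


class FrequencyCache:
    """Cache results for the most frequently asked queries (capacity >= 1)."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.store = {}
        self.hits = Counter()

    def get(self, query, fetch):
        self.hits[query] += 1
        if query in self.store:
            return self.store[query]
        result = fetch(query)  # slow path: call the retriever
        if len(self.store) < self.capacity:
            self.store[query] = result
        else:
            # Replace the least-asked cached query only if this one is hotter.
            coldest = min(self.store, key=lambda q: self.hits[q])
            if self.hits[query] > self.hits[coldest]:
                del self.store[coldest]
                self.store[query] = result
        return result


calls = []


def slow_retrieve(query):
    calls.append(query)  # record every real retrieval
    return f"docs for {query}"


cache = FrequencyCache(capacity=1)
for q in ["rates", "rates", "fees", "rates"]:
    cache.get(q, slow_retrieve)
```

In this run the retriever is called once for "rates" and once for "fees". The one-off "fees" query does not displace the popular entry, so the final "rates" lookup is served from the cache.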

Cross-Disciplinary Insights: Borrowing from network optimization, techniques like load balancing enhance resource utilization, ensuring consistent performance as data volumes grow.

Actionable Framework: Regularly monitor KPIs like retrieval speed and system throughput. Combine predictive analytics with real-time monitoring to preemptively address performance dips.

Data Quality and Retrieval Accuracy

Semantic Indexing Precision: Leveraging contextual embeddings ensures nuanced query-document matching. For example, healthcare systems use this to retrieve patient-specific data, improving diagnostic accuracy.

Overlooked Factor: Temporal relevance impacts retrieval. Solution: Integrate time-aware embeddings to prioritize recent, contextually valid data.
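A simpler stand-in for time-aware embeddings is to apply a recency decay to the similarity score at ranking time. The sketch below uses an exponential decay; the 30-day half-life, the candidate labels, and the scores are assumptions for illustration.

```python
def time_aware_score(similarity: float, age_days: float,
                     half_life_days: float = 30.0) -> float:
    """Scale semantic similarity by an exponential recency decay."""
    decay = 0.5 ** (age_days / half_life_days)
    return similarity * decay


# Hypothetical candidates: (label, similarity, age in days).
candidates = [
    ("2019 treatment guideline", 0.90, 365.0),
    ("current treatment guideline", 0.80, 10.0),
]
ranked = sorted(candidates, key=lambda c: time_aware_score(c[1], c[2]), reverse=True)
```

The older document has the higher raw similarity but ranks second once age is taken into account. The half-life should reflect how quickly information goes stale in the domain.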

Cross-Disciplinary Connection: Inspired by information theory, entropy-based filtering reduces noise, enhancing retrieval precision.

Actionable Insight: Combine active learning with user feedback to iteratively refine retrieval models, ensuring sustained accuracy in dynamic environments.

Ethical and Legal Considerations

Bias Mitigation: Employ adversarial training to detect and counteract biases in RAG outputs. For instance, hiring platforms use this to ensure fair candidate evaluations, reducing discriminatory outcomes.

Lesser-Known Factor: Data provenance ensures source accountability. Solution: Implement blockchain-based tracking for transparent data usage.

Cross-Disciplinary Insight: Borrowing from legal compliance frameworks, integrating GDPR-aligned safeguards enhances user trust and regulatory adherence.

Actionable Framework: Combine explainable AI tools with regular audits to ensure ethical transparency, fostering responsible RAG deployment in sensitive domains.

Cross-Domain Perspectives and Integrations

Unexpected Synergies: RAG bridges healthcare and education, enabling adaptive learning for medical students through real-time case studies. For example, Medbot integrates patient data with educational modules, fostering hands-on learning.

Misconception: Cross-domain AI lacks precision. Reality: Domain-specific fine-tuning ensures accuracy while maintaining adaptability.

Expert Insight: Borrowing from systems biology, RAG models mimic interconnected ecosystems, dynamically adapting to diverse inputs.

Actionable Takeaway: Develop modular architectures to seamlessly integrate RAG across industries, ensuring scalability and domain-specific relevance.

RAG in Multimodal AI Systems

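Fresh Insight: Multimodal RAG integrates text, images, and audio, enabling richer context comprehension. For instance, a multimodal pipeline could combine medical images with patient histories to support more precise diagnostics. Graph-based approaches such as GraphRAG are complementary: they organize retrieved knowledge as a graph rather than handling images themselves.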

Lesser-Known Factor: Temporal alignment ensures synchronized data processing across modalities, critical for real-time applications.

Actionable Framework: Develop adaptive pipelines that prioritize modality-specific preprocessing, ensuring seamless integration and improved system performance.

Collaborations Across Industries

Fresh Insight: RAG fosters synergistic innovation by linking healthcare and finance, such as predictive analytics for insurance underwriting using patient data.

Lesser-Known Factor: Data interoperability ensures seamless cross-industry integration, reducing redundancy and enhancing decision-making.

Actionable Framework: Establish standardized APIs to streamline data exchange, enabling scalable, cross-sector RAG applications.

Influence on AI Research and Development

Fresh Insight: RAG accelerates domain-specific AI advancements by enabling real-time access to proprietary datasets, fostering breakthroughs in precision medicine and legal analytics.

Lesser-Known Factor: Iterative feedback loops refine retrieval accuracy, enhancing model adaptability.

Actionable Framework: Integrate contrastive learning to align embeddings with user intent, driving innovation in AI research methodologies.

Emerging Trends and Future Outlook

Original Insight: RAG is evolving into multimodal systems, integrating text, images, and audio for richer interactions.

Example: Healthcare chatbots now analyze medical imaging alongside patient queries, improving diagnostic precision.

Unexpected Connection: Combining emotional AI with RAG could revolutionize mental health support, offering empathetic, real-time assistance.

Actionable Insight: Prioritize cross-disciplinary training for AI teams to harness multimodal and emotional AI advancements effectively.

Advancements in Retrieval Techniques

Fresh Insight: Dense passage retrieval (DPR) is transforming RAG by leveraging bi-encoder architectures for faster, context-aware searches.

Real-World Application: Legal AI tools use DPR to extract precise statutes, reducing research time by 40%.

Lesser-Known Factor: Contrastive learning enhances retriever alignment, minimizing irrelevant outputs.

Actionable Framework: Combine hierarchical indexing with semantic embeddings to optimize retrieval for large-scale, domain-specific datasets.

Potential of RAG in AGI Development

Fresh Insight: Dynamic retrieval of the kind RAG provides could let future AGI systems integrate real-time knowledge adaptively, bridging the limitations of static training.

Real-World Application: No AGI system exists today, but current financial AI models already use RAG to analyze live market trends, enhancing investment strategies.

Lesser-Known Factor: Feedback loops refine retrieval accuracy, reducing hallucinations in AGI outputs.

Actionable Framework: Integrate contextual embeddings with iterative retriever updates to ensure AGI scalability and precision.

Expert Predictions and Speculations

Fresh Insight: Experts foresee multimodal RAG systems integrating text, images, and audio for richer conversational AI experiences.

Real-World Application: Healthcare RAG systems could combine patient records with imaging data for precise diagnostics.

Lesser-Known Factor: Cross-modal embeddings enhance data fusion, improving contextual understanding.

Actionable Framework: Develop adaptive pipelines to seamlessly process multimodal inputs, ensuring scalability and accuracy in diverse applications.

FAQ

What is Retrieval-Augmented Generation (RAG) and how does it differ from traditional conversational AI models?

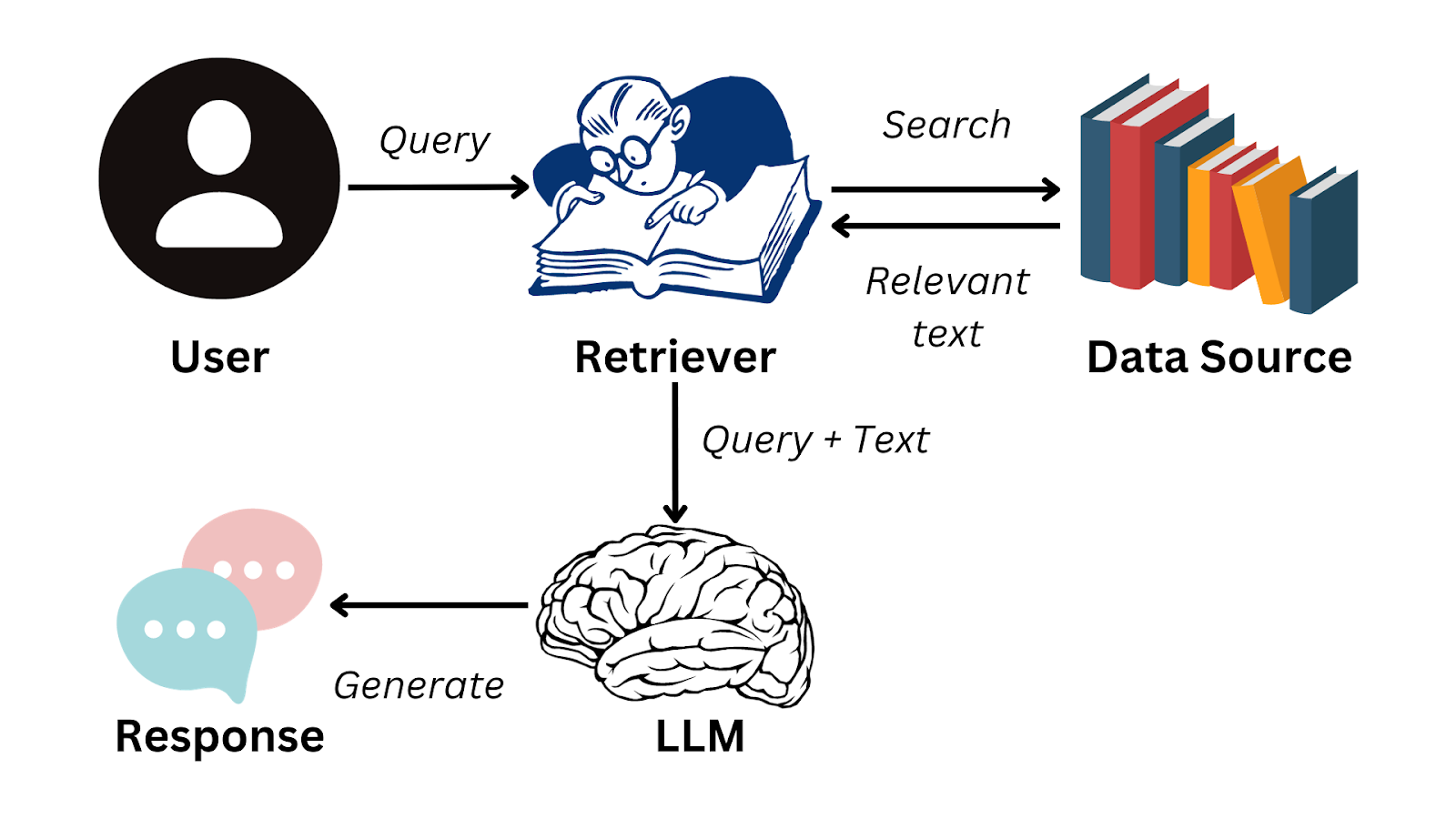

Retrieval-Augmented Generation (RAG) is an advanced AI framework that combines retrieval mechanisms with generative models to enhance conversational AI. Unlike traditional models that rely solely on pre-trained data, RAG dynamically retrieves relevant, up-to-date information from external sources. This approach ensures more accurate, contextually relevant, and informed responses, addressing the limitations of static knowledge in traditional systems. By integrating retrieval and generation, RAG bridges the gap between static training data and real-time adaptability, making it a transformative solution for modern conversational AI challenges.

How does RAG improve the accuracy and relevance of responses in conversational AI systems?

RAG improves the accuracy and relevance of responses in conversational AI systems by dynamically retrieving real-time, context-specific information from external knowledge bases. This ensures that the generated responses are grounded in factual, up-to-date data, reducing errors and hallucinations commonly seen in traditional models. By leveraging advanced retrieval techniques, such as dense vector indexing and semantic search, RAG aligns retrieved content with user queries, enhancing precision. Additionally, the integration of retrieved data with generative capabilities allows for coherent and contextually appropriate outputs, making interactions more reliable and user-centric.

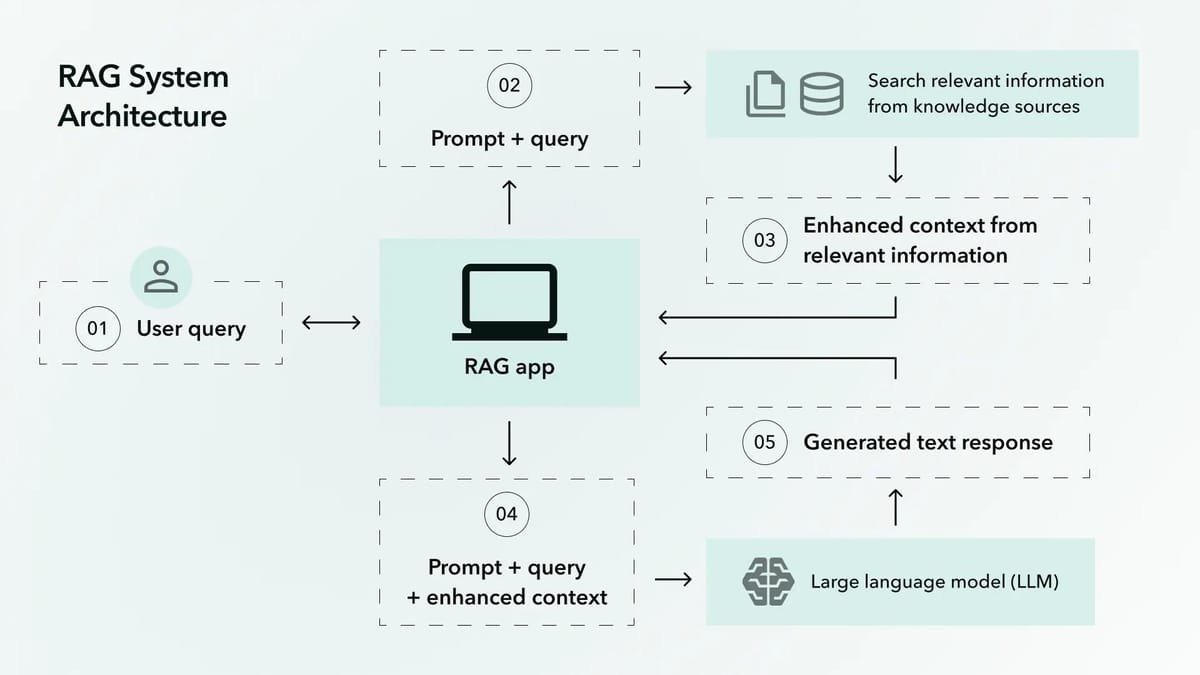

What are the key components and architecture of a RAG-enabled conversational AI system?

A RAG-enabled conversational AI system is built from two primary modules: the retrieval system and the generative model. The retrieval system acts as the information gatherer, using techniques like semantic search and vector embeddings to identify and rank the most relevant data from large knowledge bases, so that only the most pertinent information is passed on. The generative model then combines this retrieved data with the user query to produce coherent, contextually accurate responses. Together, these components form a modular architecture that supports real-time updates, scalability, and adaptability, enabling the system to deliver precise and context-aware interactions.

What challenges do RAG systems face, and how can they be mitigated effectively?

RAG systems face challenges such as retrieval accuracy, scalability, and data quality. Retrieval accuracy can be hindered by noisy or incomplete data, leading to irrelevant or misleading responses. Scalability issues arise when handling large datasets or high query volumes, resulting in latency and performance bottlenecks. Data quality is another critical concern, as outdated or low-quality information can compromise the reliability of outputs. These challenges can be mitigated effectively through robust data preprocessing techniques like noise filtering and normalization, scalable infrastructure such as distributed architectures and caching, and continuous monitoring of data sources to ensure relevance and accuracy. Additionally, employing advanced retrieval algorithms and fine-tuning models for specific domains can further enhance system performance.

What are the emerging trends and future advancements expected in RAG for conversational AI?

Emerging trends and future advancements in RAG for conversational AI include the integration of multimodal capabilities, enabling systems to process and generate responses using diverse data formats such as text, images, and audio. Continuous learning and adaptation are also gaining traction, allowing RAG systems to evolve in real-time based on user interactions and feedback. Personalization is another key trend, with advancements focusing on tailoring responses to individual user preferences and historical context. Additionally, the development of domain-specific RAG systems is expected to enhance precision and relevance in specialized fields like healthcare and finance. As RAG technology matures, we can anticipate further innovations in real-time data synthesis, ethical AI practices, and scalable architectures, driving its adoption across industries and reshaping conversational AI systems.

Conclusion

RAG is not just a technological leap; it represents a paradigm shift in conversational AI, blending static knowledge with dynamic adaptability. For instance, healthcare chatbots powered by RAG have reduced diagnostic errors by integrating real-time patient data with medical literature. Similarly, in customer service, telecom companies have slashed query resolution times by leveraging RAG’s ability to retrieve live data. These examples underscore its transformative potential.

A common misconception is that RAG merely enhances retrieval; in reality, it changes how AI systems arrive at answers by grounding generation in retrieved sources, akin to pairing an author with a librarian who has instant access to an ever-growing library. However, challenges like data bias and latency persist, requiring robust mitigation strategies. Experts predict that future advancements, such as multimodal RAG and emotional AI integration, will further personalize and enrich user interactions.

Ultimately, RAG’s ability to bridge static and dynamic knowledge positions it as a cornerstone for the next generation of conversational AI, reshaping industries and redefining user expectations. Its journey is not just about improving systems but about reimagining the very fabric of human-AI collaboration.

Summarizing RAG’s Impact

RAG’s transformative power lies in its ability to synthesize static and dynamic data, creating contextually rich responses. For example, in financial analysis, RAG integrates live market trends with historical data, enabling real-time, actionable insights for investors.

This approach works because it combines semantic understanding with retrieval precision, ensuring relevance even in complex, multi-turn dialogues. Lesser-known factors, such as time-aware embeddings, further enhance temporal accuracy, a critical need in fast-evolving domains like healthcare and finance.

By bridging disciplines like natural language processing and data engineering, RAG challenges the static nature of traditional AI. To maximize its potential, organizations should focus on iterative feedback loops and domain-specific fine-tuning, ensuring scalability and precision.

Final Reflections on the Future of Conversational AI

The future hinges on adaptive emotional intelligence in AI, enabling nuanced, empathetic interactions. For instance, integrating sentiment analysis with RAG could revolutionize mental health support, offering context-aware, compassionate responses tailored to user emotions.

This works by combining real-time retrieval with affective computing, ensuring AI systems respond appropriately to emotional cues. Lesser-known influences, like cultural context modeling, can further refine personalization, addressing global user diversity.

To advance, developers should prioritize ethical AI frameworks and cross-disciplinary collaboration, ensuring conversational AI evolves responsibly while maintaining human-centric design principles. This approach promises deeper trust and engagement, redefining human-AI relationships.